LLMs Calling LLMs: Building AI Agents with Apple's Foundation Models and Tool Calling

Explore going from prompts to multi-agent architecture by leveraging Foundation Models tool calling capabilities and exposing LLMs themselves as tools

If you follow the ever-changing AI space, you might have noticed that there's a lot of discussion around agents. Between the hype and the more grounded academic world, it's a bit difficult to find a clear definition of what AI agents are.

One possible definition that is relatively accepted is that an agent is an LLM running tools in a loop and possibly working with other LLMs to achieve a goal.

Like many powerful ideas, it's very simple at its core. But you'll see that it can scale to advanced use cases.

This is a guest post by Tarek M. Ben Lechhab, indie Apple developer, freelancer, and AI enthusiast, feel free to take a look at his publication.

Apple's Foundation Models framework is an excellent candidate for experiments in this space.

Indeed it provides very solid tool calling capabilities, and the limited context window, (only 4096 tokens input and output combined), can greatly benefit from delegating tasks in order to multiply the total number of tokens available to do some work.

There are many ways agents are used today. We are going to focus on one approach: the plan and execute design pattern with a bit of orchestration.

Used wisely, those can greatly expand the capabilities of your AI-enabled app.

We will leverage tool calling in order to exhibit agentic behavior.

But first, let's take a look at tool calling in Foundation Models.

Use the Tool, Luke

I'm only half joking with this reference. Indeed, like the Force, the tool calling (or function calling) capability of LLMs is very powerful. In the LLM world,a tool is a piece of code provided beforehand that can be called by your LLM at its own discretion.

Instead of relying on a set of deterministic steps to achieve a result, you pass a tool to the LLM and let it decide if, when, and how it should call it.

The Foundation Models implementation is top notch in its own category, especially from an API design standpoint. I'm quite impressed with how much one can achieve with it, given the small size of the only currently available model.

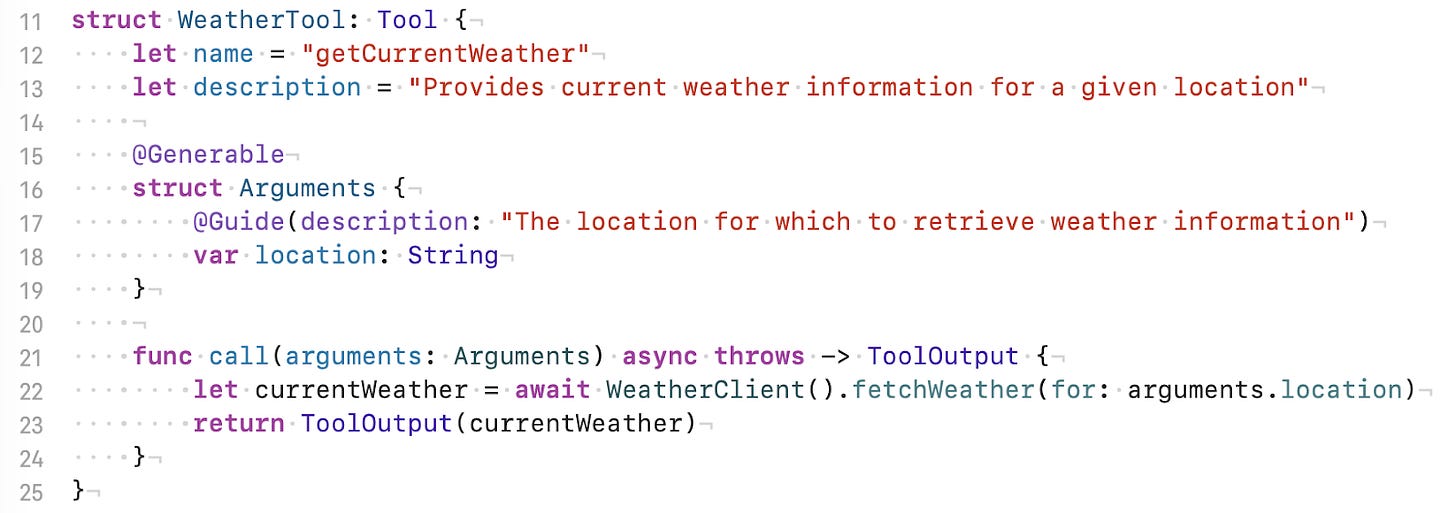

In order for the LLM to use your tools, you first declare a tool conforming to the Tool protocol.

This involves:

Naming the tool

Providing a description. This is like a mini-prompt for the LLM to understand what this tool does. Based of this mini-prompt, the LLM will determine if, when, and how it should use the tool.

Defining its arguments using @Generable. Those are the parameters that the LLM must pass in order to invoke your tool. They will be dynamically generated by the LLM.

Implementing the call method which is the action to execute when this tool is called. This method is `async`, so you can definitely do some background or network work, assuming you stay within the timeout limit of the LLM.

Returning the output, wrapped in a ToolOutput. By default it can take a string or generated content (which will be useful later)

Here's a simple example for the traditional weather tool:

Then, you just pass an instance of that tool to your LanguageModelSession during instantiation, and you're good to go!

It's up to the LLM to decide whether or not it should call the tool, when it should call it, and with which parameters.

For long-time programmers, this might sound scary, since there is a lot of non determinism here. but when it works well (and it usually works well), it's really powerful.

You are essentially programming your computer in natural language and delegating a good chunk of the decision making process.

In some sense, LLMs can be seen as a new emerging core capability of computers, and programming them with natural language as a new programming paradigm. This idea was developed by Andrej Karpathy in a talk that I can only strongly encourage you to watch.

We are still at the phase of interacting through the command line with those LLMs, as it was done in the 60s with traditional computers.

I can't even begin to imagine how it will look in the future if it evolves from the CLI to other ways of interacting!

Planning and Executing

Now that we've seen the basics of a tool, let's turn it up a notch.

Like traditional programming, this new kind of programming has design patterns.

One of these design patterns is planning and executing.

One could think a way to achieve planning and executing would be to write an advanced prompt.

We could instruct for instance the LLM to first make an extensive plan and then execute this plan.

While that might work for some cases, this approach would be pretty limited:

First because the context window is short, which means that the planning tokens will count toward the global 4096 input + output limit, not to mention the extensive prompt tokens.

Second, because a small 4-billion-parameter LLM like this one is probably not trained for planning and execution (the same goes for reasoning)

A much better way (and the standard way) to do it is by adapting our architecture to have agents interacting with each other.

I've put an LLM in your LLM

Remember the definition of an agent that we went with earlier? An LLM working with tools and other LLMs.

This is exactly what we'll experiment with: What if we exposed other LLMs to our root LLM through the tool API? That way, an LLM has the capability of calling another LLM (which is seen as a tool) when necessary.

Each LLM is an instance of a LanguageModelSession, we thus have multiple windows of 4096 tokens to work with.

Let's take a look at the architecture of what we're building:

Think of it like a restaurant:

The Coordinator which is the head chef, decides which dish to make

The Planner writes the recipe

Workers are the line cooks

The Coordinator takes back the work from cooks and plates the final dish 🍽️

There is a lot of code involved as you can imagine. I can only strongly encourage you to follow along in the sample project.

Let's take a look at the main pieces.

Breaking down the architecture

1. We instantiate a root Coordinator LLM

Here are its instructions (or system prompt)

Remark: You’ll notice that I had to explain to the coordinator to keep the order right and how to do it. At some point, since the worker tasks were running in parallel, and the order in which they returned wasn't predictable, the final output was out of order. Classic concurrency bug fixed by a simple human sentence! We wish all bugs could be fixed like that!

And its prompt:

2. We give it a set of tools, namely the Planner and the Worker which are themselves instances of LLMs

3. The Coordinator passes the user's request to the Planner using the tool's Arguments (leveraging @Generable).

4. Then, in the call method, we instantiate a new session of the LLM to handle the planning request. The Planner decomposes the user request into subtasks, the Coordinator gets them.

5. The Coordinator spawns one Worker per subtask, to execute it

6. The Coordinator gathers all the Workers results, combines them and answers the user

Why This Matters

Having such a decomposition unlocks capabilities that would be harder, or sometimes impossible to achieve with just one LLM and one prompt:

Each tool (especially workers) can reason about its task, in its own 4096-token playground.

Tools can handle ambiguity and edge cases of their own subtasks.

Tools can provide explanations for their actions, even if it goes wrong.

Ultimately, it enables emergent behaviors, although I admit that with a model this small it's seldom the case.

Taking our agents for a test run

Alright, it's time to take our shiny new toy for a spin.

Ever wanted to learn about quantum computing? Now is the chance.

Let's go with the very basic prompt: “What is quantum computing?”

Normal Mode

Here is one possible output from the normal mode:

Quantum computing is a cutting-edge field of computer science that leverages the principles of quantum mechanics to process and solve complex problems more efficiently than classical computers. Unlike traditional computers, which use bits as the smallest unit of data represented as either 0 or 1, quantum computers use quantum bits, or qubits. Qubits can exist in multiple states simultaneously due to a phenomenon known as superposition, allowing quantum computers to process a vast amount of possibilities at once.

Another key concept in quantum computing is entanglement, where qubits become interconnected in such a way that the state of one qubit can depend on the state of another, regardless of the distance between them. This interconnectedness enables quantum computers to perform certain calculations much faster than classical computers.

Quantum computing has the potential to revolutionize fields such as cryptography, optimization, materials science, and complex system simulations by tackling problems that are currently intractable for classical computers. However, building practical quantum computers remains a significant challenge due to issues like qubit stability and error rates.Agentic Mode

Let's try it with the agentic architecture now:

The coordinator receives the following prompt:

Process the following task using the available tools:

User Task: What is quantum computing? Then it passes this prompt to the worker (notice that it generated the maximum number of subtasks itself based on the range guide we provided):

Please analyze and break down the following task into subtasks:

Task: What is quantum computing?

Maximum subtasks: 5

Requirements:

1. Create logical, actionable subtasks

2. Identify dependencies between subtasks

3. Ensure subtasks are specific and measurable

4. Consider the given context and constraints

Provide a comprehensive task plan with a clear strategy.Here is the planner's decomposition:

Task Plan:

Original Task: What is quantum computing?

Strategy: To effectively breakdown the task of 'What is quantum computing?', I will systematically research foundational concepts and theories, trace the historical evolution of quantum computing, assess its potential applications, and compile these insights into a coherent summary. Each subtask builds upon the previous ones, ensuring a logical progression from theoretical understanding to practical implications.

Subtasks (5):

1. [1] Research foundational concepts of quantum computing.

2. [2] Identify key theories and principles underlying quantum computing.

3. [3] Explore historical development and milestones in quantum computing.

4. [4] Analyze applications and potential impacts of quantum computing.

5. [5] Summarize findings and prepare a structured explanation of quantum computing.The coordinator assigns each of the five subtasks to a worker, spawned in parallel. (not shown here for conciseness)

Then it gathers the findings of each worker, and combines them into the final result sent to the user:

Here's a structured breakdown of quantum computing based on the subtasks:

1. **Research Foundational Concepts of Quantum Computing:**

- Quantum computing uses principles like superposition and entanglement.

- Key components include qubits, quantum gates, and quantum algorithms.

2. **Identify Key Theories and Principles Underlying Quantum Computing:**

- **Quantum Superposition**: Allows qubits to exist in multiple states simultaneously.

- **Quantum Entanglement**: Links qubits for complex computations.

- **Quantum Tunneling**: Enables particles to pass through potential barriers.

- **Quantum Interference**: Enhances signal amplification and cancellation.

- **Quantum Decoherence**: Addresses the loss of quantum states due to environmental interaction.

3. **Explore Historical Development and Milestones in Quantum Computing:**

- Quantum computing began in the mid-20th century.

- Key milestones include the development of quantum gates in the 1980s and the first quantum computers in the 1990s.

- IBM's 2019 announcement of quantum advantage marked a significant breakthrough.

4. **Analyze Applications and Potential Impacts of Quantum Computing:**

- Quantum computing could revolutionize cryptography by breaking current encryption standards.

- In materials science, it could enhance drug discovery and material optimization.

- AI benefits from increased parallelism, improving machine learning capabilities.

5. **Summarize Findings and Prepare a Structured Explanation of Quantum Computing:**

- Quantum computing offers unparalleled computational power through superposition and entanglement.

- It holds transformative potential across industries, promising breakthroughs in cryptography, materials science, and artificial intelligence.

This structured approach ensures a logical understanding of quantum computing from foundational concepts to its wide-reaching applications.We can see that the final output from the agentic version is much more terse and elaborated than what a single prompt can usually do.

This is because we expanded the working tokens by multiplying the context windows, dispatched in separate tasks, allowing parallel and specialized work.

By providing additional tools for specific tasks to the worker tools, I believe this can scale quite well and provide real value.

Limitations

Hallucinations

While this architecture is powerful in theory, you should still keep in mind that the only currently available model is very small. So it can quickly go off rails and hallucinate.

For instance, if you ask for real world information, such as the weather, the task decomposition will be just right, but since the workers don't find tools to actually get the location and the weather for that location, it just hallucinates that it does so, or tries to fill it with last know information from training.

In a production app, the results of such an architecture will only be as good as the tests you conducted to ensure that they fit your specific needs.

I encourage you to try it, the way tasks are decomposed and executed is instructive to understand the internals of the model.

Specialized synthesizer or not?

I want to mention that an initial version of this architecture had a synthesizer tool called after the workers in order to combine the work.

However, it was fragile and the coordinator seemed often confused and tried to re-interpret those results.

This is typically the kind of limitations that probably wouldn't appear in a bigger model since it would have enough power and tokens to deal with this kind of ambiguity.

So I removed it, the Coordinator knows “naturally” how to do the synthesizing job.

Technical details

There are a few technical details worth mentioning.

Splitting instructions

I have had much better results splitting my explanations to the LLM instance between the instructions and the actual prompt. Apple engineers say that the model is specifically trained to follow instructions over prompt. So one could expect to be able to say everything in the instructions and keep the prompt minimal, but at the time of this writing, it doesn't always work well.

Mapping inputs and outputs

One key element to make this architecture work is to ensure that when tools are supposed to be chained together, the output of the previous tool has to match the input of the next one.

For instance, the coordinator is supposed to call a worker with a subtask produced from the planner.

Here's how that translates:

You can see that the planner outputs an array of subtasks, and the worker takes one subtask. Once again, the LLM figures out the mapping automatically. 🤯

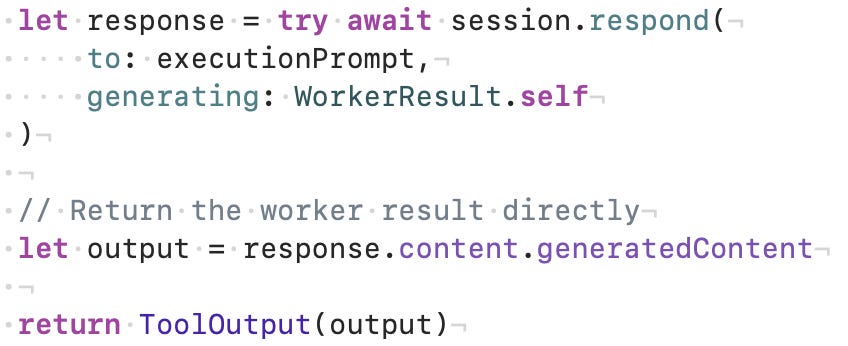

Head-scratcher: avoid guided generation with tool calling!

It took me a long while to figure this one out. I don't know if it's a bug, a limitation of the system, or just a quirk but there is a critical detail to ensure the model proceeds with tool calling.

The root or coordinator model mustn't have any “generating” type when using tool calling, at least with this architecture. It means, you shouldn't call it with session.respond(to: prompt, generating: FinalAnswer.self) for instance.

During my tests, I absolutely had to remove any generating type from the respond(to:) call, which means that ultimately it's probably just like calling it with session.respond(to: prompt, generating: String.self).

Otherwise, it would just be very inconsistent in calling the passed tools and often tried to fulfill the request itself, especially with multi-step tool calling.

I guess there's something that prevents an advanced use case such as this one from working with guided generation.

I might be wrong, but my suspicion is that guided generation seems to be baked in the model, probably during training time, and that it conflicts with the model roaming free with tool use and generating a simple String. (once again, the size of the model is probably the culprit here)

Prompt engineering

Crafting a good system prompt (instructions) and user prompts goes a long way to make the model do exactly what we want it to.

Once again, this is the interface we have to program the LLM, so finding the right words and formulation is a discipline in and of itself.

I found best results with the usual prompt engineering best practices such as using a lot of imperative language.

However, counter intuitively, I found best results by not explicitly naming the tools in the instructions and prompt. For some reason, when doing so it wasn't making the connection between the actual tools and the string representing those tools in the prompt, and consequently it would lay down its plan to do it, if it had tools at its disposal with those names. 🙄

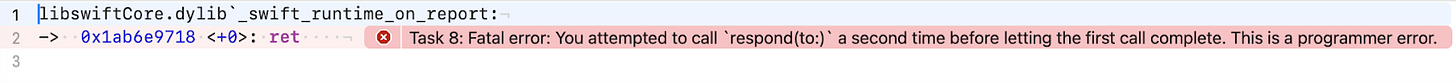

Session Management

⚠️Warning: One also has to be careful not to call `respond(to:)` on a LanguageModelSession again before it has returned. Otherwise, it crashes, so be careful in your production apps!

Also, keep in mind that while you're expanding capabilities by using many sessions, with each one of them having a context window of 4096 tokens, you still have to find ways to not overflow the root session context window.

After all, this session will manage the inputs and outputs of all child sessions, so it can quickly blow out of proportions.

For now I've just kept it simple, but in theory, there are many ways to mitigate this, such as using a sliding window, chained summarization, external memory etc.

Recursion Limitation

Initially, I wanted to go even further, I wanted to give the Worker tool / LLM an instance of itself, so it could recursively delegate tasks.

Unfortunately I encountered two issues:

First, it seems like there is a built-in limitation preventing the result from referencing itself (self-referential structs with @Generable). I was even able to uncover that Apple engineers are using a force try! inside the Foundation Models code. 😄

FoundationModels/SchemaAugmentor.swift:209: Fatal error: 'try!' expression unexpectedly raised an error: FoundationModels.GenerationSchema.SchemaError.undefinedReferences(schema: Optional("SafeResponse<WorkerResult>"), references: ["WorkerResult"], context: FoundationModels.GenerationSchema.SchemaError.Context(debugDescription: "Undefined types: [WorkerResult]", underlyingErrors: []))Second, for some reason, the worker really tends to spawn many workers, which themselves spawn many others, and so on. The number exponentially explodes at runtime.

So for now, I would avoid such an architecture without solid testing beforehand.

Observability

In the demo app, I've implemented a history tab with a full trace of all the instructions, prompts, calls, responses of all LLMs and tools.

It's really instructive to see what happens in real time. You can even tweak the architecture and the prompts and see the changes.

Performance

While profiling the app, I was able to confirm that for my use cases, the overhead of parallelism was minimal, or sometimes even improved performance a tiny bit. It seems like the Neural Engine is made to handle both sequential and concurrent executions of Foundation Models. (which makes sense, since those kind of models can be used anywhere in the system, at any time)

Wrapping it up

I hope I have given you a glimpse of what's possible.

What we did is essentially an automated implementation of Map/Reduce, expressed in natural language.

The LLM is able to decide by itself which tools to call, and to make those calls sequential or parallel.

Think about the concurrency code and all the small details that could be necessary to achieve such a task manually.

Now all you need to do is explain it in plain old English, and it will just work.

There are so many agentic patterns that can be implemented, such as agent-to-agent messaging, persistent memory, federation with other online models, etc.

I encourage you to clone the repository, build the app, and play with it.

For instance, you can try using the same prompt in normal and agentic mode and compare the results. You can also monitor the token usage and tweak the agents and the prompts. Reach out to me with your findings and remarks!

While it's true that we are a bit limited by this first model, I suspect Apple will introduce larger models, as well as online ones.

After all, the framework is called FoundationModels, plural, and the API design kind of gives away the fact that it can be a single interface to multiple models.

With a more powerful LLM, and more advanced tools, the possibilities are virtually limitless. We will be able to do so much more for our users, as we already can with this model.

Did that trigger ideas for your apps? What do you think about those agentic capabilities?

I hope this was useful to you. I have many more experiments from where this one came from, so stay tuned!

This is a guest post by Tarek M. Ben Lechhab, indie Apple developer, freelancer, and AI enthusiast, feel free to take a look at his publication.

Thanks for sharing this! Riding in a Ferrari is amazing, but sometimes it’s nice to actually drive a VW Beetle. I really appreciate how this shows what’s possible with a smaller, local model—it feels much more didactic and approachable. I’ll definitely keep following and recommending your series!