Make an Audio Notes App using Whisper in Swift

Learn how to integrate OpenAI’s Whisper and GPT-4 to create an intelligent voice notes app that organizes and enhances transcribed audio for improved usability and accessibility.

Whisper is an open-source AI model by OpenAI designed for transcription and translation of audio. It was trained on 680,000 hours of multilingual and multitask supervised audio data collected from the web, leading to improved robustness to accents, background noise and technical language.

Limitations and Considerations

While Whisper is extremely impressive, there are a few points to consider when deciding how to best include it in your app:

Visual Information is NOT Included

For example, if you’re building an app to help students record and review class lectures, Whisper can transcribe spoken content accurately. However, it won’t capture visual elements such as notes written on the board or slides from a PowerPoint presentation. This means the transcription may lack key contextual information that was conveyed visually during the lecture. As a developer, you’ll need to find ways to bridge this gap through your app’s UI and features.

Emotional Information and Tone Are Not Captured

Like any transcription system, Whisper does not capture emotional nuances or tone in speech. This means that elements such as sarcasm, humor, or emphasis may be lost in the text. For example, a professor’s joke may read as a straightforward statement without any indication of irony. Similarly, enthusiasm, frustration, or urgency—often conveyed through vocal inflection—will not be reflected in the transcription.

As a result, the meaning of certain statements may become ambiguous or misinterpreted without the context provided by tone. If your app relies on preserving emotional intent, you may need to explore additional solutions, such as integrating speaker sentiment analysis or allowing users to annotate transcripts with contextual cues.

Speaker Identification Is Not Included

If you’re building an app to transcribe Zoom meetings or multi-speaker conversations, one key challenge is identifying who is speaking. Whisper does not include built-in speaker diarization, meaning it won’t distinguish between different voices in a transcript. Unless speakers introduce themselves—such as saying, “This is Casey speaking now”—the transcription will appear as a continuous block of text without attribution.

This can make reviewing transcripts difficult, especially in meetings, interviews, or panel discussions where multiple participants contribute. One potential solution is to post-process the transcript using GPT-4. For example, you could prompt it with: “There are three speakers in this transcription. Based on speaking styles and context, make an informed guess at diarizing it.” While this approach won’t be perfect, especially since the speaker voices won’t be included, it can help infer speaker distinctions, making the transcript more structured and readable. To enhance accuracy, you can also provide users with an interface to review, edit, and refine the assigned speaker labels before finalizing the transcript.
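As a rough sketch of the post-processing idea above, the helper below assembles such a diarization prompt from a speaker count and a transcript. The function name and the "Speaker N:" labeling convention are assumptions for illustration; the resulting string would be sent as the user message of whatever chat model you use.

```swift
import Foundation

/// Builds a prompt asking a chat model to guess speaker turns in a transcript.
/// Hypothetical helper: the wording follows the example prompt in the text.
func makeDiarizationPrompt(speakerCount: Int, transcript: String) -> String {
    let speakers = speakerCount == 1 ? "is 1 speaker" : "are \(speakerCount) speakers"
    return """
    There \(speakers) in this transcription. Based on speaking styles and \
    context, make an informed guess at diarizing it. Label each turn as \
    "Speaker 1:", "Speaker 2:", and so on.

    Transcription:
    \(transcript)
    """
}

// Example with a made-up three-person exchange.
let prompt = makeDiarizationPrompt(
    speakerCount: 3,
    transcript: "Welcome everyone. Thanks, glad to be here. Shall we start?"
)
print(prompt)
```

Keeping the speaker count as a parameter lets the UI ask the user how many people were in the conversation, which gives the model a useful constraint.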

The Purpose of Transcriptions

When designing your app, it’s important to consider the true goal of transcription. While users may say they want a transcript or like having one available, what they actually need is the ability to extract specific information from it. A raw transcript alone isn’t always useful—its value comes from how easily users can search, navigate, and derive insights from it. Design your app with this in mind.

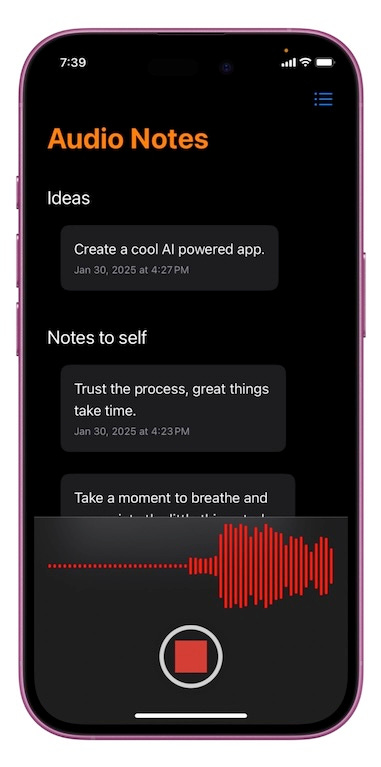

An Audio Notes App

To illustrate these concepts, let’s design a Notes app where users can create a note via voice recordings. Rather than just generating raw transcriptions, the app will intelligently organize the content, making it easy to search, categorize, and extract key insights. In this case, transcription serves as a means to an end—enhancing accessibility and usability—rather than being valuable on its own.

Building the Audio Notes app involves the following steps:

Record User Audio and Transcribe Using Whisper

I will not be covering the Swift-specific implementation details, such as how to handle audio recording, in this post. Instead, I’ll focus on the AI integration aspects of the app. Paid subscribers to this blog will have access to the full source code, including the complete Swift implementation.

Since Whisper is open-source, there are multiple ways to integrate it, including using on-device models. For those interested in local deployment, the WhisperKit library is worth exploring. However, hosting a large model locally on an iPhone can consume significant storage, while smaller models tend to have higher error rates, particularly for non-English languages. Due to these limitations, I’ll be integrating Whisper via an API to balance accuracy and performance. If your company has a backend team, they can host the large Whisper model on their own servers and provide a custom API, ensuring greater control over user data privacy and security.

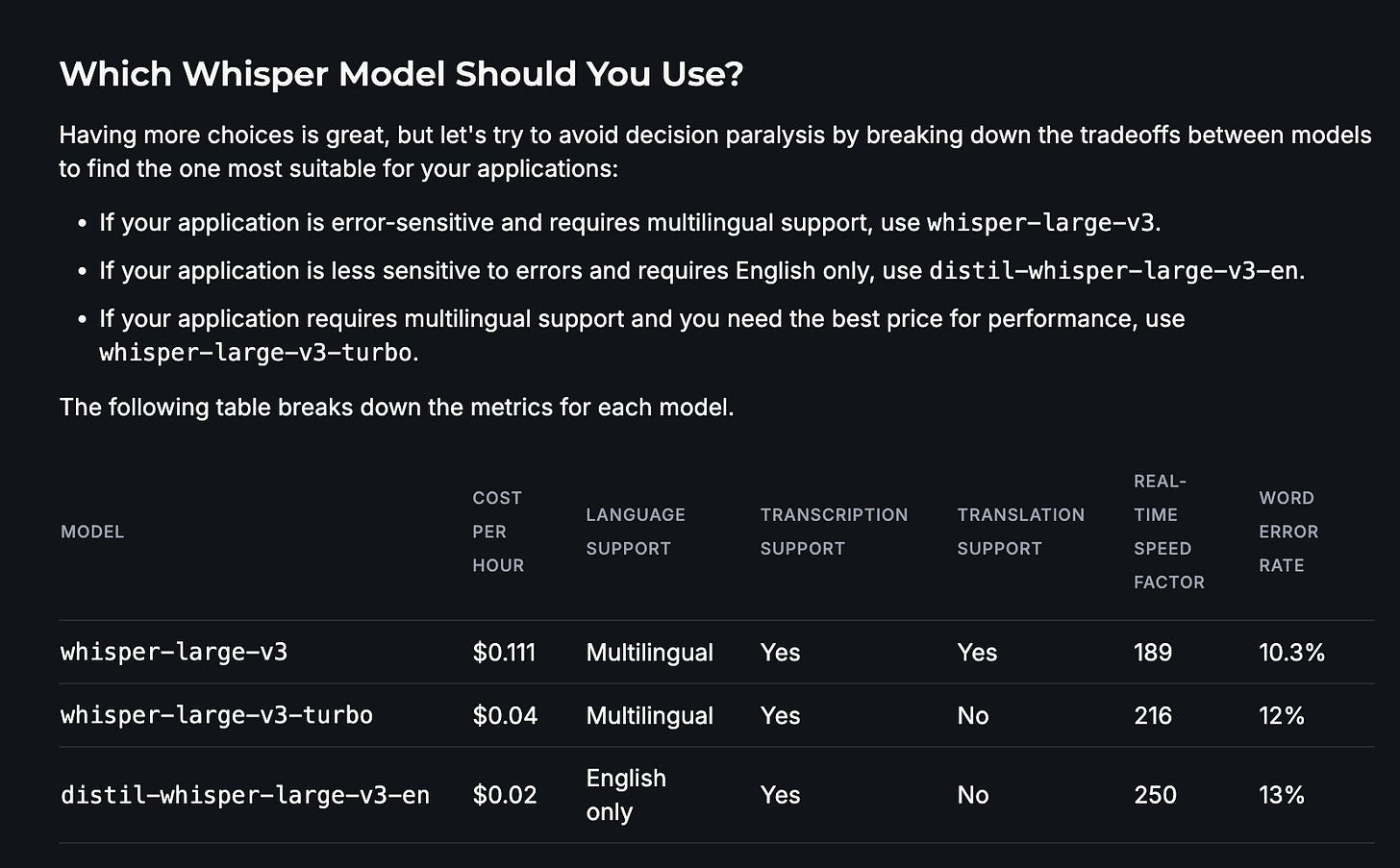

Since OpenAI created the Whisper model, they offer a solid API for it, which many open source libraries integrate. However, at $0.006 / minute the pricing adds up to $0.36 / hour of recording. Instead, I will be using the Whisper model hosted by Groq, a company that provides APIs for many open source models, at a cost of $0.111 / hour of recording.

It is also worth exploring Fireworks.ai, another company that hosts and creates APIs for open source models. Their cost for the Whisper model is even cheaper at $0.0015 / min, a total of $0.09 / hour of recording.

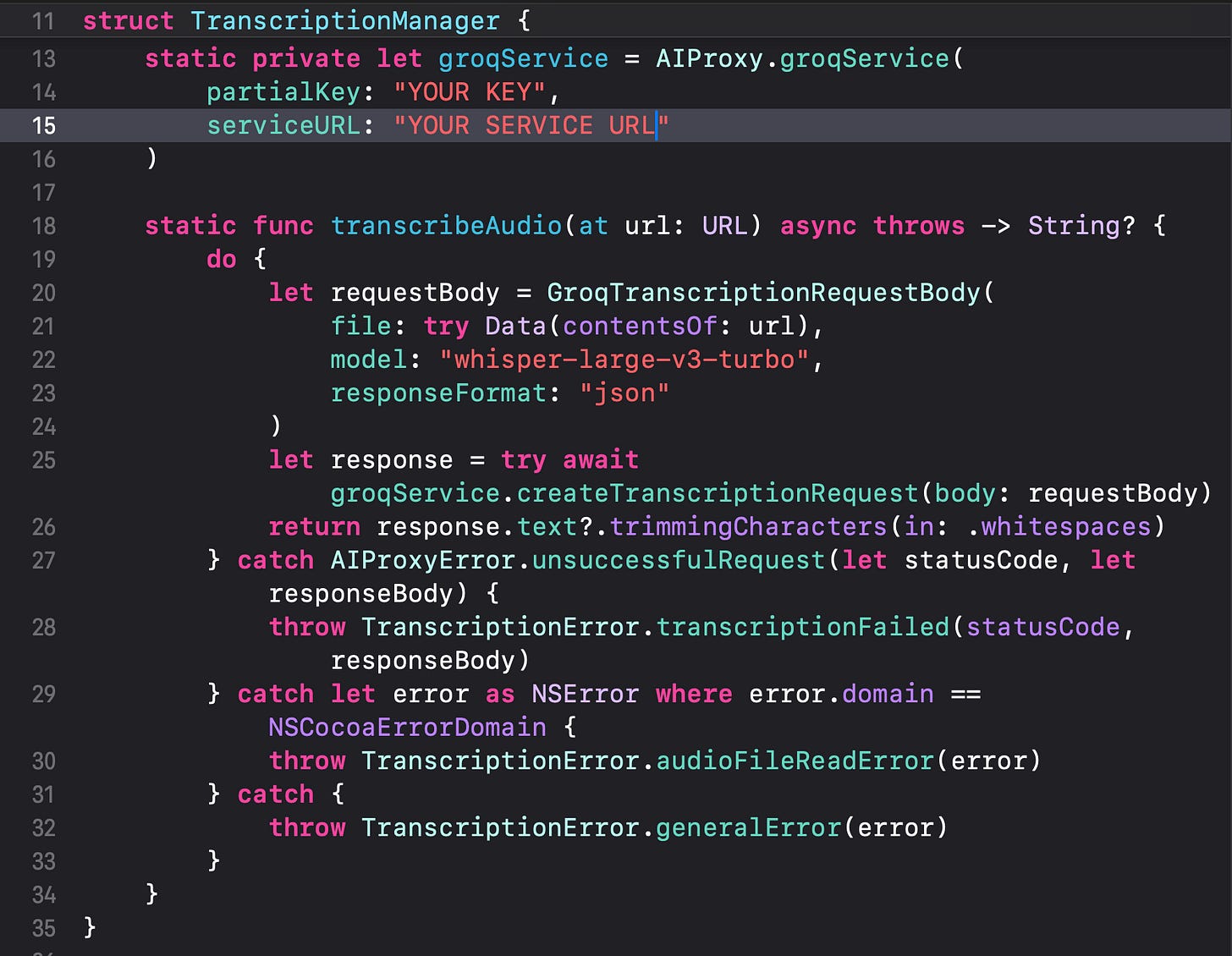
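To make the price comparison concrete, here is a small arithmetic sketch using only the figures quoted above. The usage scenario (1,000 users recording 30 minutes each per month) is a made-up assumption, and provider prices change often, so treat the numbers as a snapshot.

```swift
import Foundation

// Prices quoted in the text (USD).
let openAIPerMinute = 0.006
let fireworksPerMinute = 0.0015
let groqPerHour = 0.111

/// Cost of transcribing a recording of `minutes` length at a per-minute price.
func cost(perMinute: Double, minutes: Double) -> Double {
    perMinute * minutes
}

let openAIPerHour = cost(perMinute: openAIPerMinute, minutes: 60)
let fireworksPerHour = cost(perMinute: fireworksPerMinute, minutes: 60)

// Hypothetical usage: 1,000 users each recording 30 minutes per month.
let monthlyHours = 1_000.0 * 0.5
let providers = [("OpenAI", openAIPerHour), ("Groq", groqPerHour), ("Fireworks", fireworksPerHour)]
for (name, perHour) in providers {
    print(name, String(format: "$%.2f / month", perHour * monthlyHours))
}
```

At 500 hours of audio per month, that works out to roughly $180 on OpenAI, $55.50 on Groq, and $45 on Fireworks.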

To integrate these APIs, the difficult part for client-side developers who do not want to manage a backend is storing the API keys securely. For this purpose, I’ll be using AIProxy, which makes it very easy to store and manage API keys with checks such as rate limits, DeviceCheck, and specifying endpoints to make sure hackers do not steal and misuse these expensive services. AIProxy also provides the AIProxySwift library to easily integrate with multiple AI service providers, including Groq and OpenAI.

Sign up for Groq to get your API key - make sure to add some money for testing ($5 is more than enough!) - and follow the instructions on AIProxy to store the key and set up DeviceCheck, then get your partialKey and serviceURL to use in the iOS app.

After you set up your credentials and get the user’s audio recording in your app, transcribing the audio via Whisper is as simple as a few lines of code:

That’s it!
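For a sense of what a client library does under the hood, here is a library-independent sketch that builds the multipart form body an OpenAI-compatible transcription endpoint expects. The `file` and `model` form fields follow the OpenAI audio transcription API; the helper name, the file name, and the model name `whisper-large-v3` are assumptions for illustration. In the app itself, AIProxySwift handles all of this, so you would not write it by hand.

```swift
import Foundation

/// Builds a multipart/form-data body for an OpenAI-compatible
/// `audio/transcriptions` endpoint. Hypothetical helper, not part of AIProxySwift.
func makeTranscriptionBody(
    audio: Data,
    fileName: String,
    model: String,
    boundary: String
) -> Data {
    var body = Data()
    func append(_ string: String) { body.append(Data(string.utf8)) }

    // Text field: which Whisper model to use.
    append("--\(boundary)\r\n")
    append("Content-Disposition: form-data; name=\"model\"\r\n\r\n")
    append("\(model)\r\n")

    // File field: the recorded audio bytes.
    append("--\(boundary)\r\n")
    append("Content-Disposition: form-data; name=\"file\"; filename=\"\(fileName)\"\r\n")
    append("Content-Type: application/octet-stream\r\n\r\n")
    body.append(audio)

    // Closing boundary marks the end of the form.
    append("\r\n--\(boundary)--\r\n")
    return body
}

// Example with placeholder bytes standing in for a real recording.
let boundary = "Boundary-\(UUID().uuidString)"
let body = makeTranscriptionBody(
    audio: Data("fake-audio".utf8),
    fileName: "note.m4a",
    model: "whisper-large-v3",
    boundary: boundary
)
print("Request body is \(body.count) bytes")
```

The body would be sent as a POST with the header `Content-Type: multipart/form-data; boundary=<boundary>`, and the JSON response carries the transcript in its `text` field. Never ship a raw API key in the app to authorize such a request; that is exactly the problem AIProxy solves.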

Process the Transcription

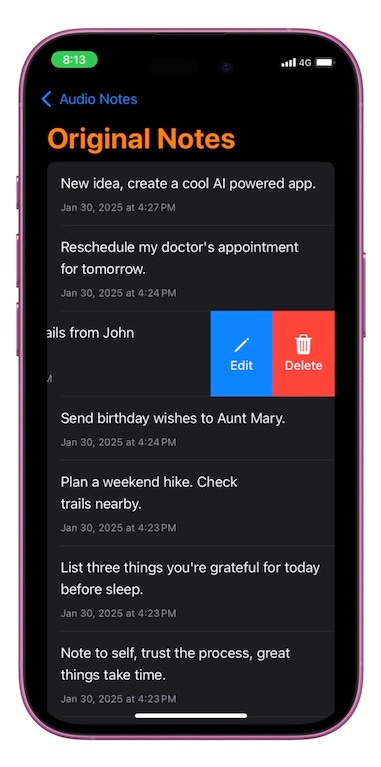

The harder part is processing the transcription. In the Audio Notes app, each Note object is stored in SwiftData with its transcribed text, and the original audio recording is deleted.
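A minimal sketch of that store-then-delete step is shown below. It uses a plain struct as a stand-in for the SwiftData model so that it runs anywhere; the field names `id`, `transcript`, and `createdDate` match the ones used later in this post, while the `UUID` type for `id` and the helper name are assumptions.

```swift
import Foundation

/// Plain-struct stand-in for the app's note model (the real app uses SwiftData).
struct Note {
    let id: UUID
    var transcript: String
    let createdDate: Date
}

/// Creates a note from a finished transcription, then removes the audio file.
func makeNote(transcript: String, deletingAudioAt audioURL: URL) throws -> Note {
    let note = Note(
        id: UUID(),
        transcript: transcript.trimmingCharacters(in: .whitespacesAndNewlines),
        createdDate: Date()
    )
    // Delete the recording only after the note exists, so a failure never loses both.
    if FileManager.default.fileExists(atPath: audioURL.path) {
        try FileManager.default.removeItem(at: audioURL)
    }
    return note
}

// Usage with a throwaway file standing in for a recording.
let audioURL = FileManager.default.temporaryDirectory
    .appendingPathComponent("note-\(UUID().uuidString).m4a")
try Data("fake-audio".utf8).write(to: audioURL)
let note = try makeNote(transcript: "  let's buy sugar \n", deletingAudioAt: audioURL)
print(note.transcript)
```

Deleting the audio keeps storage use low, but it also means a bad transcription cannot be redone, which is one reason to let users edit the text afterwards.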

The user is given an option to view all the transcriptions via the list toolbar item - and even to delete and modify them (in case there was a spelling error):

But this is not the main part of the app. Instead, the goal is to organize all the notes into easy-to-manage categories and subcategories. To do this, I'll be using OpenAI's GPT-4o model. Again, I will be using AIProxy to store my OpenAI API key and the AIProxySwift library to make the request.

The strategy I use is to send ALL the user notes to the model and have it do two things:

- Organize the notes into categories and subcategories.

- Modify the note text where needed to remove irrelevant information.

The system prompt I used is as follows:

static private var systemMessage: String {
    let dateFormatter = DateFormatter()
    dateFormatter.dateFormat = "MMMM d, yyyy"
    let today = dateFormatter.string(from: Date())

    return """
    You are a helpful notes organization assistant. You will receive a list of daily notes, each with an id, text and creation date. Your goal is to analyze and categorize these notes into a clear, hierarchical structure. Today’s date is \(today).

    EXAMPLE CATEGORIES:
    1. TODO
       - Home (household tasks, repairs, maintenance)
       - Work (professional tasks, meetings, deadlines)
       - Friends (social commitments, events)
       - School (academic tasks, assignments)
       - Kids (only if children are explicitly mentioned)
       - Additional subcategories as needed
    2. Thoughts (reflections, personal insights)
    3. Ideas (creative concepts, personal project ideas)
    4. Other categories as needed

    OUTPUT REQUIREMENTS:
    - Organize notes chronologically within categories.
    - Rephrase notes for clarity and action (e.g., change “let’s buy sugar” to “buy sugar”).
    - Include dates ONLY if they are explicitly relevant (e.g., “by Friday”); exclude creation dates unless they are meaningful deadlines.
    - Do not assume a category (especially “Kids”) unless it is explicitly mentioned.
    - **If a note contains a repeated prefix like “Note to self:”, transform that prefix into a category (e.g., “Notes to self”) and remove the repeated text from each note’s content.**
    - Remove redundant prefixes or markers (e.g., “Task: …”) when they are clearly placed under a suitable category.
    - Do not add explanatory text, meta-commentary, or empty categories.
    - Return ONLY the categorized notes, with no additional commentary.
    """
}

To organize the notes, I want to use OpenAI’s structured outputs, a JSON-based response format, so that I can manage the output and data in my own app. My response schema was as follows:

// NotesOrganizationManager.swift
static private let notesSchema: [String: AIProxyJSONValue] = [
    "type": "object",
    "properties": [
        "notes": [
            "type": "array",
            "items": [
                "type": "object",
                "properties": [
                    "id": [
                        "type": "string",
                        "description": "Unique identifier for the note being referenced from the provided initial notes dataset"
                    ],
                    "modified_text": [
                        "type": "string",
                        "description": "Processed or modified version of the note text"
                    ],
                    "category": [
                        "type": "string",
                        "description": "the category that this note is classified into"
                    ],
                    "subcategory": [
                        "type": "string",
                        "description": "the sub-category that this note is classified into, if any"
                    ]
                ],
                "required": ["id", "modified_text", "category"],
                "additionalProperties": false
            ]
        ]
    ],
    "required": ["notes"],
    "additionalProperties": false
]

Finally, my function to analyze and categorize the notes using the AIProxy library is as follows:

static func analyzeNotes(_ notes: [Note]) async throws -> [CategorizedNote] {
    if notes.isEmpty { return [] }

    let dateFormatter = DateFormatter()
    dateFormatter.dateFormat = "MMMM d, yyyy"

    let notesText = notes.map { note in
        let formattedDate = dateFormatter.string(from: note.createdDate)
        return "* \(note.id): \(note.transcript) (\(formattedDate))"
    }.joined(separator: "\n")

    let userMessage = """
    Please analyze, rephrase, and organize the following notes:

    \(notesText)

    Please categorize these notes into appropriate categories and modify their text as makes sense for the category.
    """

    do {
        let requestBody = OpenAIChatCompletionRequestBody(
            model: model,
            messages: [
                .system(content: .text(systemMessage)),
                .user(content: .text(userMessage))
            ],
            responseFormat: .jsonSchema(
                name: "CategorizedNotes",
                description: "Array of note objects with their classifications",
                schema: notesSchema,
                strict: false
            )
        )

        let response = try await openAIService.chatCompletionRequest(body: requestBody)

        guard let jsonString: String = response.choices.first?.message.content,
              let jsonData = jsonString.data(using: .utf8) else {
            throw NotesManagerError.invalidResponse
        }

        let categorizedNotes: CategorizedNotes = try JSONDecoder().decode(
            CategorizedNotes.self,
            from: jsonData
        )
        return categorizedNotes.notes
    } catch AIProxyError.unsuccessfulRequest(let statusCode, let responseBody) {
        throw NotesManagerError.openAIError(
            statusCode: statusCode,
            response: responseBody
        )
    } catch {
        throw NotesManagerError.underlying(error)
    }
}
}

Now the app can process and store the new modified text, categories, and subcategories for each note!
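The function above decodes into `CategorizedNotes`, whose definition isn't listed here. One plausible shape, assuming the types simply mirror the response schema (the Swift property names and the `CodingKeys` mapping are assumptions), could look like this:

```swift
import Foundation

/// One categorized note, mirroring the fields of the response schema.
struct CategorizedNote: Codable, Equatable {
    let id: String
    let modifiedText: String
    let category: String
    let subcategory: String?   // optional: not in the schema's "required" list

    enum CodingKeys: String, CodingKey {
        case id
        case modifiedText = "modified_text"
        case category
        case subcategory
    }
}

/// Top-level wrapper matching the schema's root object.
struct CategorizedNotes: Codable {
    let notes: [CategorizedNote]
}

// Sample payload shaped like the schema (made-up data for illustration).
let sampleJSON = """
{"notes": [
  {"id": "1", "modified_text": "Buy sugar", "category": "TODO", "subcategory": "Home"},
  {"id": "2", "modified_text": "App idea: voice journal", "category": "Ideas"}
]}
"""

let decoded = try JSONDecoder().decode(CategorizedNotes.self, from: Data(sampleJSON.utf8))
for note in decoded.notes {
    print(note.category, note.subcategory ?? "(no subcategory)", note.modifiedText)
}
```

Making `subcategory` optional matters because the schema does not require it, so the model may omit the key entirely for notes that only have a top-level category.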

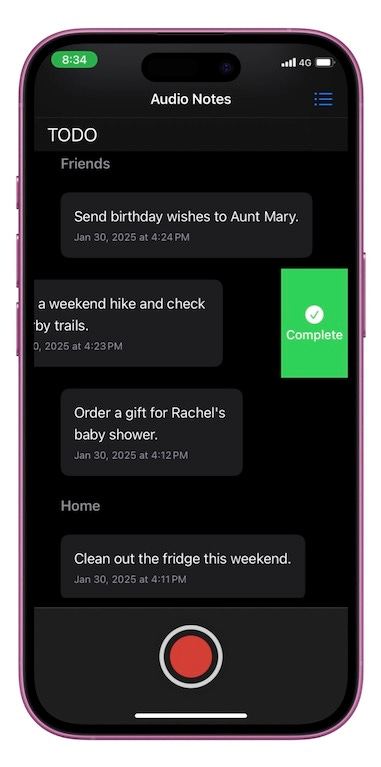

Display the Categorized Notes to the User

The final step is to show the transcribed notes in a new and useful way to the user, allowing the user to complete their tasks / ideas / thoughts:
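One way to prepare the data for such a screen is to group the categorized notes into sections before handing them to the UI. The sketch below is an assumption about how this could be done, not the app's actual view code; `NoteSection` and `makeSections` are hypothetical names, and the struct is a trimmed stand-in for the decoded model.

```swift
import Foundation

/// Trimmed stand-in for the decoded model returned by the analysis step.
struct CategorizedNote {
    let id: String
    let modifiedText: String
    let category: String
    let subcategory: String?
}

/// One displayable group: a category plus an optional subcategory.
struct NoteSection {
    let category: String
    let subcategory: String?
    let notes: [CategorizedNote]
}

/// Groups notes by category, then subcategory, sorted alphabetically.
/// Notes without a subcategory come first within their category.
func makeSections(from notes: [CategorizedNote]) -> [NoteSection] {
    let byCategory = Dictionary(grouping: notes, by: { $0.category })
    var sections: [NoteSection] = []
    for category in byCategory.keys.sorted() {
        let bySubcategory = Dictionary(
            grouping: byCategory[category, default: []],
            by: { $0.subcategory ?? "" }
        )
        for subcategory in bySubcategory.keys.sorted() {
            sections.append(NoteSection(
                category: category,
                subcategory: subcategory.isEmpty ? nil : subcategory,
                notes: bySubcategory[subcategory, default: []]
            ))
        }
    }
    return sections
}

// Example with made-up notes.
let sections = makeSections(from: [
    CategorizedNote(id: "1", modifiedText: "Buy sugar", category: "TODO", subcategory: "Home"),
    CategorizedNote(id: "2", modifiedText: "Email professor", category: "TODO", subcategory: "School"),
    CategorizedNote(id: "3", modifiedText: "Voice journal app", category: "Ideas", subcategory: nil),
    CategorizedNote(id: "4", modifiedText: "Fix sink", category: "TODO", subcategory: "Home"),
])
for section in sections {
    print(section.category, section.subcategory ?? "(none)", section.notes.map(\.modifiedText))
}
```

Each `NoteSection` maps naturally onto a section header in a list, with the notes inside keeping the order the model returned them in.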

Conclusion

OpenAI’s Whisper model makes it remarkably easy to create new voice-based interfaces for Apple apps. However, it’s important to go beyond simply transcribing audio and focus on innovative ways to transform user input into meaningful and actionable experiences. By rethinking how voice data is organized and presented, you can deliver more value to users and enhance the overall functionality of your app.

By combining the power of Whisper for accurate transcription and GPT-4o for intelligent organization, we’ve created an app that transforms raw voice recordings into structured, actionable notes.