Using the FoundationModels Framework for Streaming from External LLM Providers

As of Xcode 26 Beta 4, we can use GeneratedContent with JSON to stream responses from external LLM providers such as OpenAI, Anthropic, Gemini, and more!

There's no argument that the FoundationModels framework's design is brilliant. But given the on-device model's limitations (e.g. a limited set of supported languages and a small context window), it isn't a fit for many use cases and apps. For more complex use cases especially, most apps that integrate LLMs will continue to rely on external providers such as OpenAI, Anthropic, and Gemini.

So I was extremely excited to see this X post from @_julianschiavo announcing that we can now use the same FoundationModels API design to work with external LLM providers!

In this post, I'll walk you through how to do this by building an AI-powered Financial Analyst app that uses the Perplexity API to search company-specific financial data.

The AI-powered Financial Analyst App

The Financial Analyst app is simple: the user picks the companies they want financial reports on from a list, sets the time range for that financial information, and can optionally ask custom questions.

The app then calls the Perplexity API for a structured response, which streams in as new tokens arrive, and the UI is rebuilt from the latest streamed generation.

So how do we build this?

Streaming the Perplexity API Response

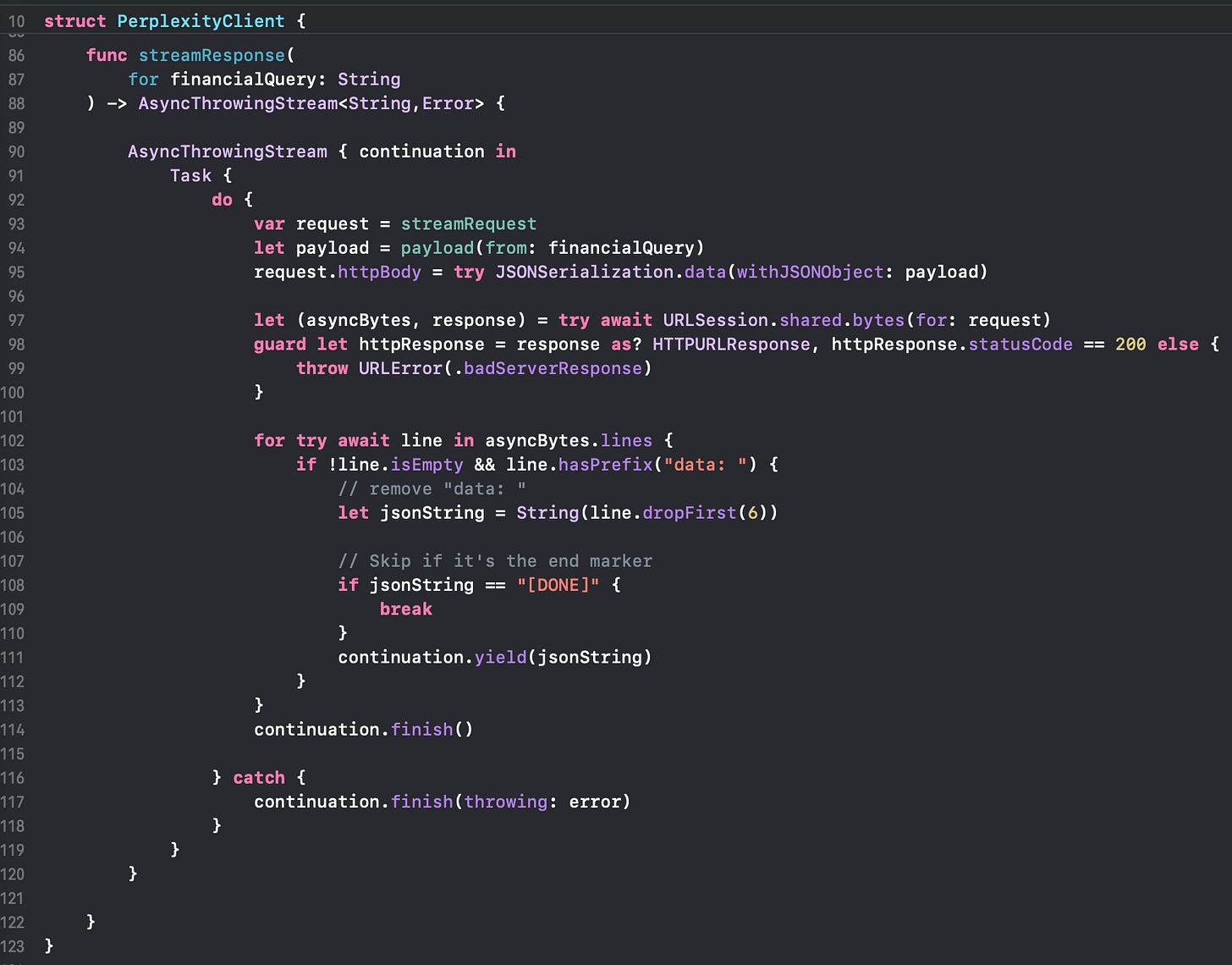

Streaming an API response line by line has been possible on iOS for some time (for example, by wrapping URLSession's async bytes in an AsyncThrowingStream), so this part is straightforward.

Notice that the stream returns the JSON string from the response.
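Below is a minimal sketch of such a streaming call. It assumes Perplexity's OpenAI-compatible server-sent events format, where each line reads data: followed by a chunk whose choices[0].delta.content carries the next text fragment, and it yields the accumulated JSON string after every fragment. ChatChunk, contentFragment(fromSSELine:), and streamAnalysisJSON(request:) are hypothetical names, not the app's actual PerplexityClient API.

```swift
import Foundation

/// Minimal shape of one streamed chunk, assuming the OpenAI-compatible
/// layout: {"choices": [{"delta": {"content": "..."}}]}.
struct ChatChunk: Decodable {
    struct Choice: Decodable {
        struct Delta: Decodable {
            let content: String?
        }
        let delta: Delta?
    }
    let choices: [Choice]
}

/// Returns the text fragment carried by one SSE line, or nil for comment
/// lines, the "[DONE]" sentinel, or chunks that carry no content.
func contentFragment(fromSSELine line: String) -> String? {
    guard line.hasPrefix("data:") else { return nil }
    let payload = line.dropFirst("data:".count).trimmingCharacters(in: .whitespaces)
    guard payload != "[DONE]" else { return nil }
    let chunk = try? JSONDecoder().decode(ChatChunk.self, from: Data(payload.utf8))
    return chunk?.choices.first?.delta?.content
}

/// Streams the accumulated JSON string after every new fragment arrives.
func streamAnalysisJSON(request: URLRequest) -> AsyncThrowingStream<String, Error> {
    AsyncThrowingStream { continuation in
        let task = Task {
            do {
                let (bytes, _) = try await URLSession.shared.bytes(for: request)
                var accumulated = ""
                // Each line is one SSE field; lines without content are skipped.
                for try await line in bytes.lines {
                    guard let fragment = contentFragment(fromSSELine: line) else { continue }
                    accumulated += fragment
                    continuation.yield(accumulated)
                }
                continuation.finish()
            } catch {
                continuation.finish(throwing: error)
            }
        }
        // Cancel the network task if the consumer stops listening.
        continuation.onTermination = { _ in task.cancel() }
    }
}
```

Yielding the accumulated string (rather than each fragment) means every value the consumer sees is the full JSON prefix received so far, which is what the decoding steps below need.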

I won't go deeper into the prompt and the other variables used to build the streaming request, since that's just a standard API call, but you can view the full PerplexityClient code here.

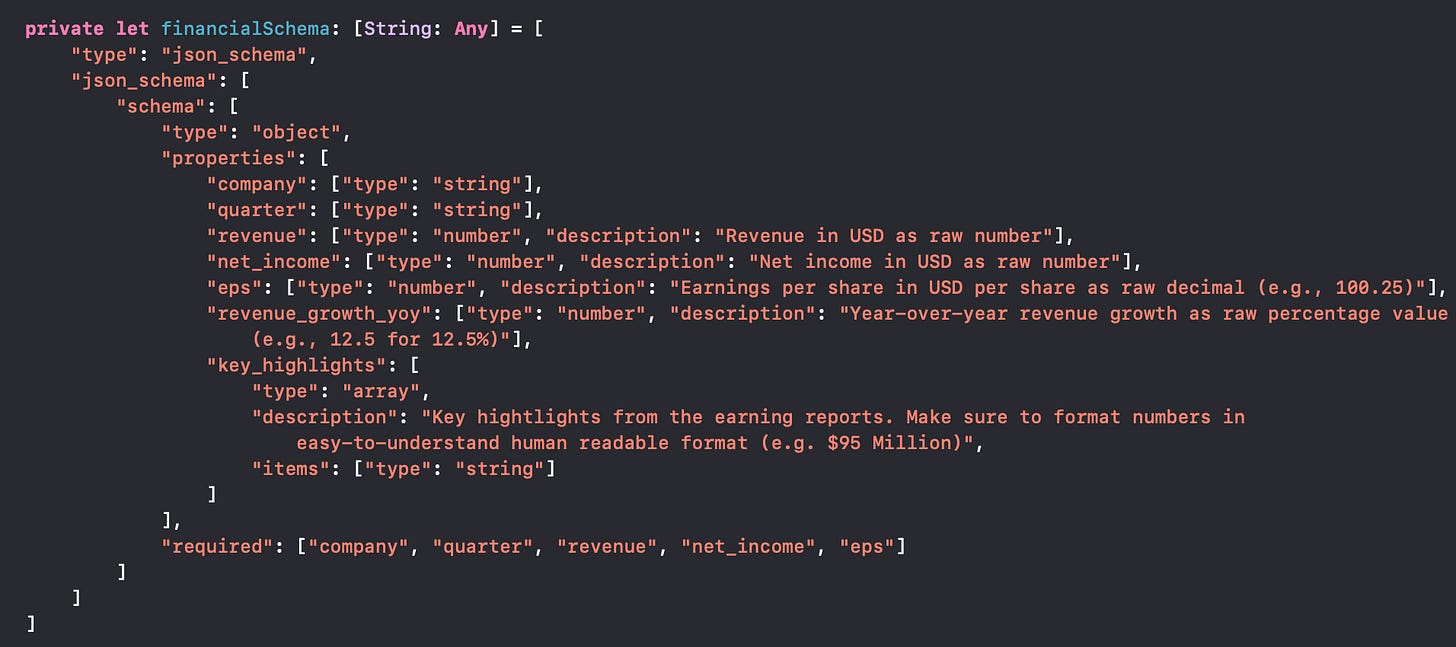

I'll just point out the JSON schema passed to the Perplexity API, since it defines the structured JSON response we expect back from the model.
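As a rough illustration, here is how such a request might be assembled. The schema fields (company, summary, key_metrics) are hypothetical stand-ins for the app's real schema, and the endpoint, the sonar model name, and the response_format shape of type json_schema are assumptions based on Perplexity's OpenAI-compatible chat completions API; check the current Perplexity documentation before relying on them.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

/// Hypothetical JSON schema for a simplified Analysis response.
func analysisSchema() -> [String: Any] {
    let properties: [String: Any] = [
        "company": ["type": "string"],
        "summary": ["type": "string"],
        "key_metrics": ["type": "array", "items": ["type": "string"]] as [String: Any]
    ]
    return [
        "type": "object",
        "properties": properties,
        "required": ["company", "summary", "key_metrics"]
    ]
}

/// Builds a streaming request that asks for JSON matching the schema.
/// Endpoint, model, and response_format layout are assumptions.
func makeAnalysisRequest(apiKey: String, prompt: String) throws -> URLRequest {
    var request = URLRequest(url: URL(string: "https://api.perplexity.ai/chat/completions")!)
    request.httpMethod = "POST"
    request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")

    let body: [String: Any] = [
        "model": "sonar",
        "stream": true,
        "messages": [["role": "user", "content": prompt]],
        "response_format": [
            "type": "json_schema",
            "json_schema": ["schema": analysisSchema()]
        ] as [String: Any]
    ]
    request.httpBody = try JSONSerialization.data(withJSONObject: body)
    return request
}
```

The property names in the schema are the keys the model will emit, so they have to match the Generable type's property names exactly.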

Using Generable Types for Decoding

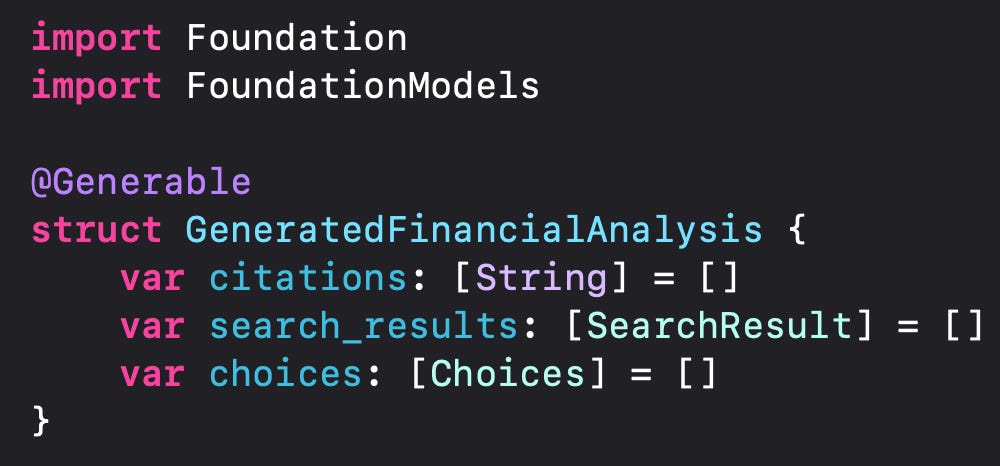

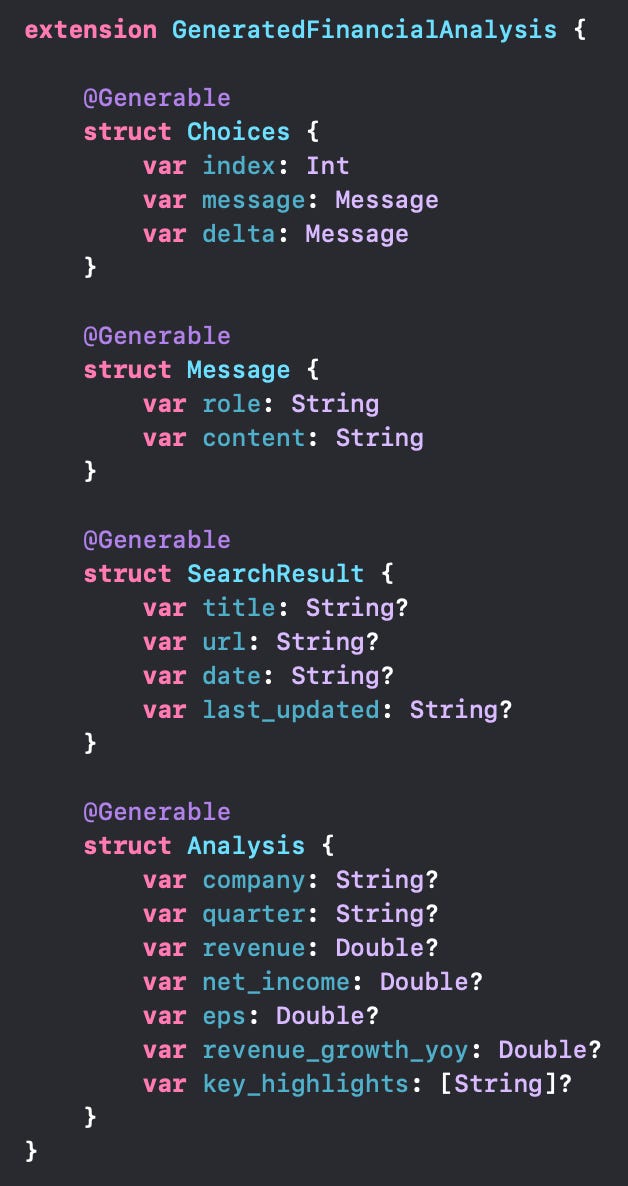

Normally we would create Codable types to decode the API response, but now we can use a Generable type from the FoundationModels framework instead!

Generable types are now Codable by default, so it just works! One caveat: as of Xcode 26 Beta 4, you cannot add CodingKeys. If the API returns snake_case keys (e.g. search_results), you cannot map them to a camelCase property such as searchResults; each property must be named EXACTLY as its key appears in the API response.
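For illustration, here is a hypothetical, simplified Analysis type. Its property names match the JSON keys verbatim, including the snake_case key_metrics, because there is no CodingKeys mapping to rename them. The fields and guide descriptions are assumptions, not the app's actual model.

```swift
import FoundationModels

// Hypothetical, simplified version of the app's Analysis type.
@Generable
struct Analysis {
    @Guide(description: "The company's name")
    var company: String

    @Guide(description: "A short overview of the company's financial performance")
    var summary: String

    // Named exactly like the (hypothetical) "key_metrics" JSON key,
    // since there is no CodingKeys mapping to rename it.
    var key_metrics: [String]
}
```

A complete JSON string can be turned into an Analysis through GeneratedContent, which is the same path the streaming steps below build on.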

Generating a Generable Object from JSON

When the Perplexity API responds, the structured output we requested with our JSON schema (which corresponds to the Generable Analysis type above) arrives as a plain JSON string in the assistant message content.

However, here is the problem: the JSON response is generated one token at a time, so until the stream finishes, every string we receive is incomplete JSON.
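To see why this matters, here is a small Foundation-only sketch. The AnalysisDTO type and the snapshot strings are made up for illustration: a regular Codable decode fails on every snapshot except the final, complete one, so a plain JSONDecoder can't drive a live-updating UI.

```swift
import Foundation

// Hypothetical two-field shape, used only to show the decoding failure.
struct AnalysisDTO: Decodable {
    let company: String
    let summary: String
}

/// Returns true only if the snapshot decodes into a complete AnalysisDTO.
func canDecode(_ json: String) -> Bool {
    (try? JSONDecoder().decode(AnalysisDTO.self, from: Data(json.utf8))) != nil
}

// Illustrative snapshots of the accumulated stream, oldest first.
let snapshots = [
    #"{"company": "Ap"#,
    #"{"company": "Apple", "summary": "Revenue grew"#,
    #"{"company": "Apple", "summary": "Revenue grew."}"#
]

for snapshot in snapshots {
    print(canDecode(snapshot) ? "decoded" : "cannot decode yet")
}
```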

So how can we decode it? This is the magical part!

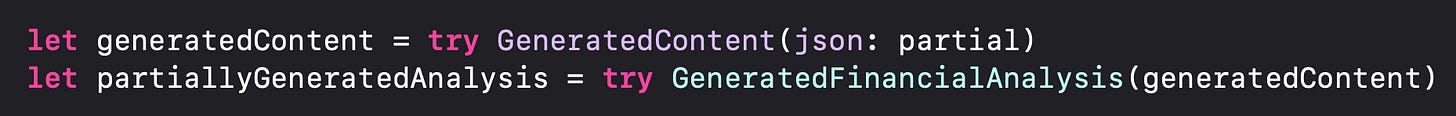

First, create a GeneratedContent value from any partial JSON string!
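In code, this first step might look like the sketch below. It relies on GeneratedContent's init(json:) accepting a truncated JSON string, which is the behavior this whole approach depends on; the snapshot string is illustrative.

```swift
import FoundationModels

// An illustrative snapshot from the middle of the stream: the closing
// quote and brace haven't arrived yet.
let partialJSON = #"{"company": "Apple", "summary": "Revenue gr"#

do {
    // Assumes init(json:) tolerates incomplete JSON, as described above.
    let content = try GeneratedContent(json: partialJSON)
    print(content)
} catch {
    print("Not parseable yet: \(error)")
}
```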

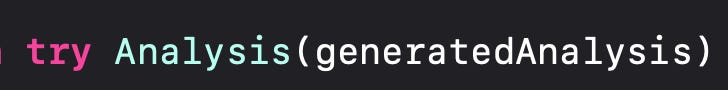

Then pass that GeneratedContent into the Generable type that the JSON represents.

This starts producing the Analysis as soon as any of the information in the partial JSON string can be parsed!
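A minimal sketch of this second step, using a hypothetical two-field Analysis type: the macro-generated Analysis.PartiallyGenerated type is built from the GeneratedContent, and its properties are optionals that stay nil until their values have streamed in.

```swift
import FoundationModels

// Hypothetical, simplified Generable type.
@Generable
struct Analysis {
    var company: String
    var summary: String
}

let partialJSON = #"{"company": "Apple", "summary": "Rev"#

do {
    let content = try GeneratedContent(json: partialJSON)
    // @Generable synthesizes a PartiallyGenerated type whose properties are
    // all optional, so it can be built from incomplete content.
    let partial = try Analysis.PartiallyGenerated(content)
    print(partial.company ?? "company not streamed yet")
} catch {
    print("Not parseable yet: \(error)")
}
```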

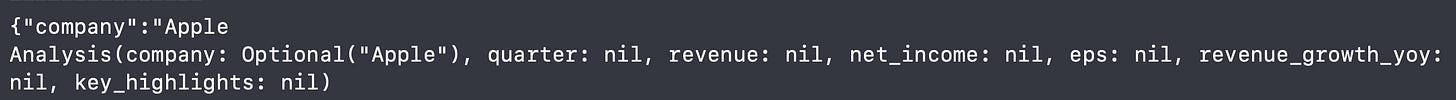

For example, here the company name came in, so now the company is available!

Putting those two steps together is all it takes to get an analysis object from the API response.

In the same way, to generate the GeneratedFinancialAnalysis object, we pass in the JSON string from the Perplexity response stream every time a new one arrives.

This generates the current version of the Analysis object from the partial JSON string!
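Wrapped in a small generic helper, the two steps can run on every snapshot the stream delivers. This is a sketch under assumptions: partiallyGenerated(_:fromJSON:) is a hypothetical name, it returns nil (rather than throwing) when a snapshot can't be interpreted yet, and the loop simulates the stream with a fixed array.

```swift
import FoundationModels

// Hypothetical, simplified Generable type.
@Generable
struct Analysis {
    var company: String
    var summary: String
}

/// Hypothetical helper: converts a JSON snapshot into the partially generated
/// value of any Generable type, or returns nil if it can't be parsed yet.
func partiallyGenerated<T: Generable>(_ type: T.Type, fromJSON json: String) -> T.PartiallyGenerated? {
    guard let content = try? GeneratedContent(json: json) else { return nil }
    return try? T.PartiallyGenerated(content)
}

// Simulated accumulated snapshots, in the order a stream would deliver them.
let snapshots = [
    #"{"com"#,
    #"{"company": "Apple", "sum"#,
    #"{"company": "Apple", "summary": "Revenue grew."}"#
]

var latest: Analysis.PartiallyGenerated?
for json in snapshots {
    // Keep the previous value when a snapshot can't be parsed yet.
    if let next = partiallyGenerated(Analysis.self, fromJSON: json) {
        latest = next
    }
}
print(latest?.summary ?? "no summary yet")
```

In the app, the same loop would run over the AsyncThrowingStream from the Perplexity client, assigning each non-nil result to the view's state.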

Updating the UI

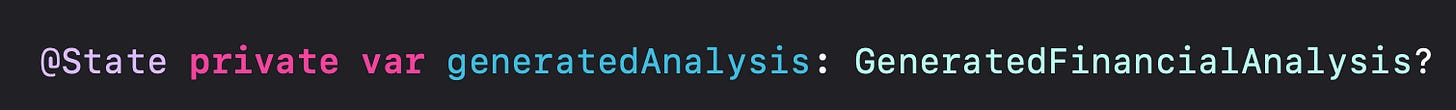

Live-updating the UI as the stream delivers more and more information is as simple as updating the generatedAnalysis State variable every time a new response with additional data arrives through the stream.

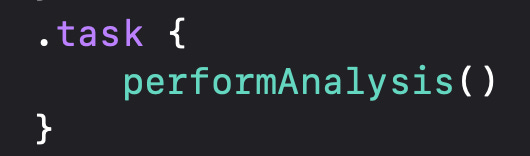

Simply call performAnalysis when the view loads.

Then keep replacing the generatedAnalysis variable with the latest version inside the performAnalysis function.
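Pulling the UI pieces together, a hypothetical AnalysisView could look like the sketch below. To stay self-contained it replaces the Perplexity client with fakeAnalysisStream(), an illustrative stand-in that yields a growing JSON string one character at a time; the view and property names only approximate the article's.

```swift
import SwiftUI
import FoundationModels

// Hypothetical, simplified Generable type.
@Generable
struct Analysis {
    var company: String
    var summary: String
}

/// Hypothetical stand-in for the Perplexity stream: yields a growing JSON
/// string one character at a time, like accumulated streamed tokens.
func fakeAnalysisStream() -> AsyncThrowingStream<String, Error> {
    let full = #"{"company": "Apple", "summary": "Revenue grew year over year."}"#
    return AsyncThrowingStream { continuation in
        let task = Task {
            var end = full.startIndex
            while end < full.endIndex, !Task.isCancelled {
                end = full.index(after: end)
                continuation.yield(String(full[..<end]))
                try? await Task.sleep(for: .milliseconds(20))
            }
            continuation.finish()
        }
        continuation.onTermination = { _ in task.cancel() }
    }
}

struct AnalysisView: View {
    // The latest partial result; SwiftUI re-renders whenever it is replaced.
    @State private var generatedAnalysis: Analysis.PartiallyGenerated?
    @State private var errorMessage: String?

    var body: some View {
        VStack(alignment: .leading, spacing: 8) {
            // Each field appears as soon as it has streamed in.
            if let company = generatedAnalysis?.company {
                Text(company).font(.headline)
            }
            if let summary = generatedAnalysis?.summary {
                Text(summary)
            }
            if let errorMessage {
                Text(errorMessage).foregroundStyle(.red)
            }
        }
        .padding()
        .task { await performAnalysis() }
    }

    private func performAnalysis() async {
        do {
            for try await json in fakeAnalysisStream() {
                // Skip snapshots that can't be interpreted yet.
                guard let content = try? GeneratedContent(json: json),
                      let latest = try? Analysis.PartiallyGenerated(content) else { continue }
                generatedAnalysis = latest
            }
        } catch {
            errorMessage = error.localizedDescription
        }
    }
}
```

Because .task is tied to the view's lifetime, the stream is cancelled automatically if the view disappears mid-analysis.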

You can view the full AnalysisView code here.

Conclusion

That’s it! It only takes a few steps to use the Generable macro from the FoundationModels framework to create an impressive streaming experience with external LLM providers.

Simply:

1. Stream the LLM provider's API response as a JSON string
2. Use Generable types to decode the JSON
3. Update the UI with the latest streamed Generable object

The FoundationModels team once again did an impressive job of letting us use the same developer experience with external models!

Now, back to building 🤓

P.S. The full code for the Financial Analyst app is available on GitHub here.
