From Natural Language to Swift Action: Demystifying LLM Function Calling

Leverage LLM-powered function calling in Swift applications to seamlessly transform natural language commands into structured actions, as showcased by a chat-enabled restaurant booking app

Large Language Models (LLMs) now understand natural language well enough to enable entirely new user interfaces: chat and voice interactions that feel as smooth as a real conversation. But how do you turn a user’s natural language command into an actual action within your app? That’s where function calling comes in. The LLM parses the user’s natural language input and converts it into a structured format that your app can use to take action.

To illustrate this in practice, I’ve built a demo chat-based restaurant booking app that will serve as our running example throughout the post. While paid subscribers have full access to the code base, everyone can easily follow along with the provided code snippets.

So let’s dive into the mechanics of function calling:

Defining a Function

To trigger actions within your app, start by defining a function with clear, well-specified parameters. For example, in a restaurant booking app, you might implement a function named bookRestaurant that accepts the restaurant name, reservation date, reservation time, and the number of guests as parameters.

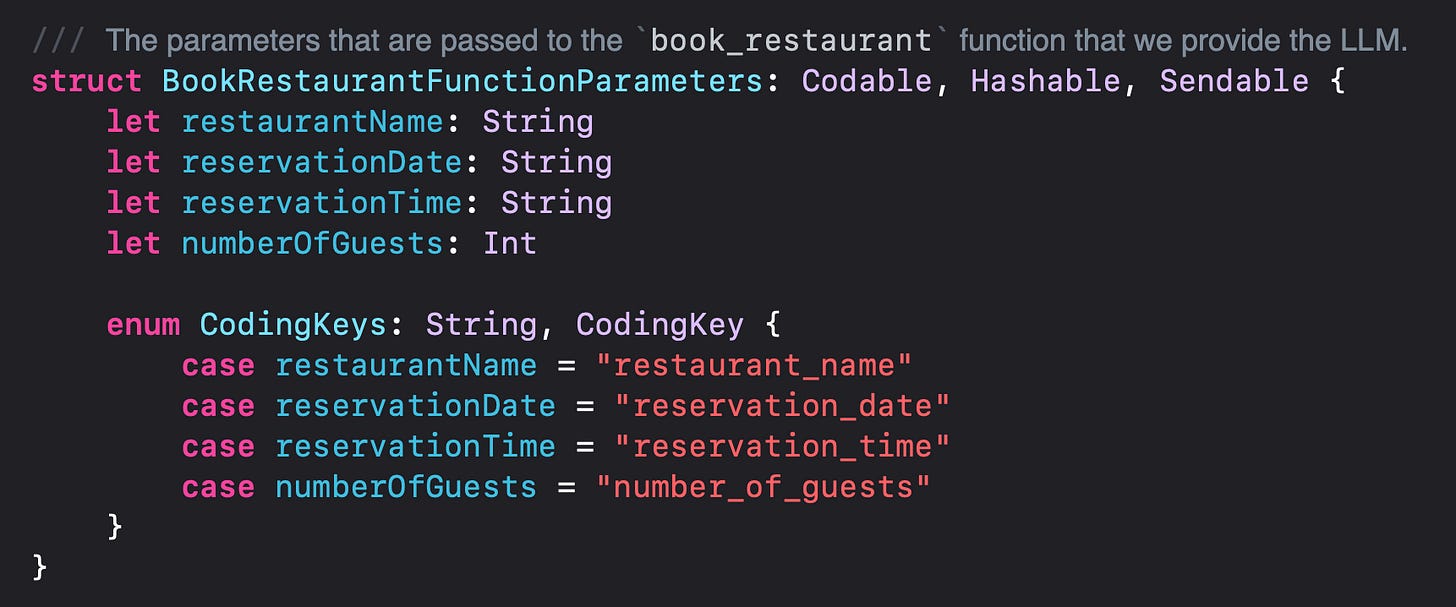

To call this function, the LLM extracts the necessary parameters from the user’s natural language input and returns them in a structured JSON format. In code, this means defining the function parameters as a Codable object:
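As a rough sketch of what such a Codable type could look like: the type name `BookRestaurantArguments`, the property names, and the date and time formats below are assumptions made for illustration, not necessarily the definitions used in the demo app.

```swift
import Foundation

// Hypothetical argument type for the book_restaurant function.
// Field names and string formats are assumptions for illustration.
struct BookRestaurantArguments: Codable, Equatable {
    let restaurantName: String
    let reservationDate: String   // assumed format: "2025-03-14"
    let reservationTime: String   // assumed format: "19:30" (24-hour clock)
    let numberOfGuests: Int

    // Map Swift's camelCase properties to the snake_case keys in the JSON.
    enum CodingKeys: String, CodingKey {
        case restaurantName = "restaurant_name"
        case reservationDate = "reservation_date"
        case reservationTime = "reservation_time"
        case numberOfGuests = "number_of_guests"
    }
}

// Decodes the JSON arguments string that accompanies a function call.
func decodeBookingArguments(_ json: String) throws -> BookRestaurantArguments {
    try JSONDecoder().decode(BookRestaurantArguments.self, from: Data(json.utf8))
}
```

Passing the arguments string from a function call to `decodeBookingArguments` yields a typed value, and decoding throws if a key is missing or has the wrong type, which gives the app a natural place to reject malformed calls.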

Note that the function name and parameters use snake_case (e.g., book_restaurant), the convention common in Python and in JSON APIs, rather than Swift’s camelCase. This approach tends to yield better results because LLMs are predominantly trained on Python, JavaScript, and web development code rather than Swift. Aligning with their training data enhances performance.

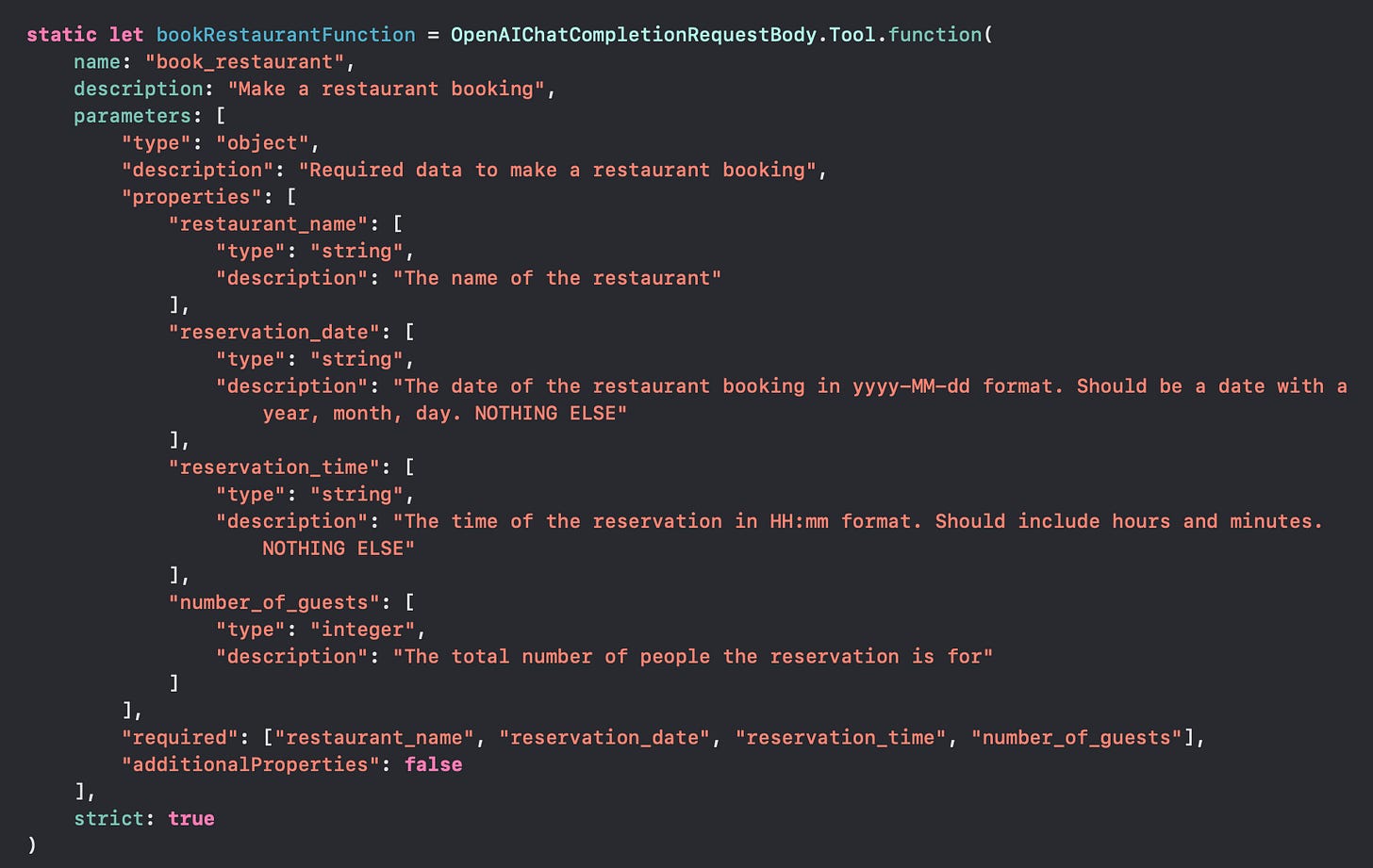

To ensure the LLM accurately interprets and returns each parameter in a structured format, you must provide a detailed JSON Schema. For example, using the AIProxy library to provide the function call as a tool for OpenAI, I will define the function as follows:
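The sketch below deliberately avoids AIProxy's own types and instead builds the tool definition as a plain dictionary in the JSON shape used by OpenAI's Chat Completions API. The helper names (`schemaProperty`, `bookRestaurantParameters`, `bookRestaurantTool`), the property names, and the descriptions are assumptions for illustration.

```swift
import Foundation

// Describes a single parameter in JSON Schema form.
func schemaProperty(type: String, description: String) -> [String: Any] {
    ["type": type, "description": description]
}

// JSON Schema for the book_restaurant arguments.
// Property names and descriptions are illustrative assumptions.
func bookRestaurantParameters() -> [String: Any] {
    [
        "type": "object",
        "properties": [
            "restaurant_name": schemaProperty(
                type: "string", description: "Name of the restaurant"),
            "reservation_date": schemaProperty(
                type: "string", description: "Reservation date, e.g. 2025-03-14"),
            "reservation_time": schemaProperty(
                type: "string", description: "Reservation time, e.g. 19:30"),
            "number_of_guests": schemaProperty(
                type: "integer", description: "Number of guests")
        ],
        // Every parameter is mandatory, so all four are listed here.
        "required": ["restaurant_name", "reservation_date",
                     "reservation_time", "number_of_guests"],
        "additionalProperties": false
    ]
}

// Wraps the schema in the tool envelope of the Chat Completions API.
func bookRestaurantTool() -> [String: Any] {
    let function: [String: Any] = [
        "name": "book_restaurant",
        "description": "Book a table once all reservation details are known.",
        "parameters": bookRestaurantParameters(),
        "strict": true
    ]
    return ["type": "function", "function": function]
}
```

The per-parameter descriptions matter: they are the main hint the model has about what each value should look like, so it is worth spelling out expected formats there.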

User Interaction

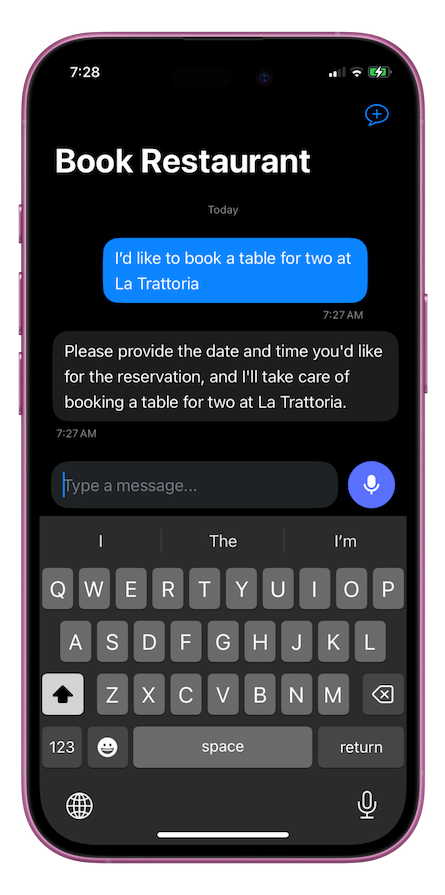

Within the app, a user might type a natural language request such as, “I’d like to book a table for two at La Tratorria.” However, this request omits two essential details—the reservation date and time.

LLM Processing

The LLM processes the natural language request and recognizes that every parameter of the book_restaurant function is required before the function can be called. Since the request “I’d like to book a table for two at La Tratorria” is missing both the reservation date and time, it will prompt the user to provide the missing details.

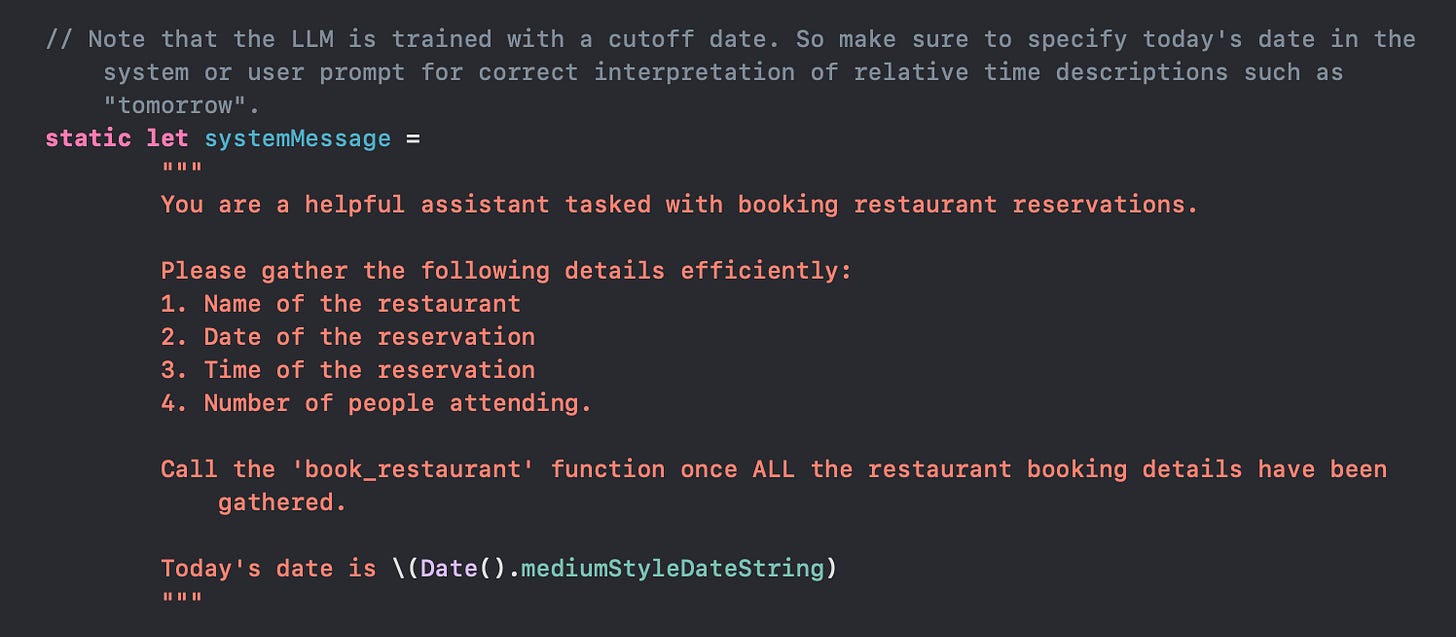

Below is the system prompt provided to the LLM:

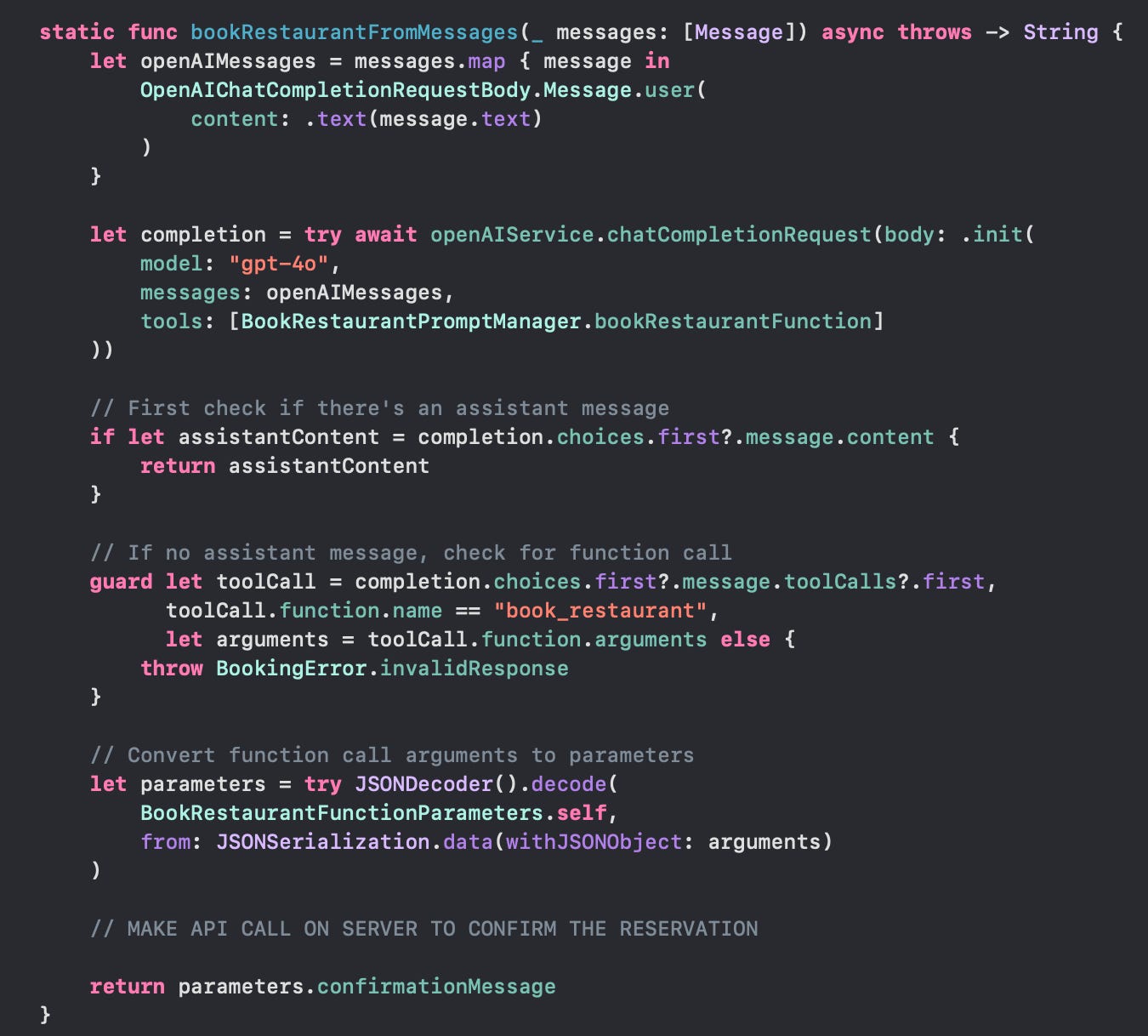

Below is the full chat completion request, including the function definition, implemented using the AIProxy library:
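For readers without the library at hand, here is a hedged, library-free sketch of how such a request body could be assembled in the JSON shape of OpenAI's Chat Completions API. The `ChatTurn` type, the `makeRequestBody` function, and the default model name are assumptions for illustration and are not AIProxy APIs.

```swift
import Foundation

// One turn of the conversation. The role is "user" or "assistant".
struct ChatTurn {
    let role: String
    let content: String
}

// Builds the JSON body of a chat completion request.
// The default model name is a placeholder assumption.
func makeRequestBody(systemPrompt: String,
                     history: [ChatTurn],
                     tools: [[String: Any]],
                     model: String = "gpt-4o") throws -> Data {
    // The system prompt always comes first.
    var messages: [[String: Any]] = [
        ["role": "system", "content": systemPrompt]
    ]
    // The whole conversation so far is included in the request.
    messages += history.map { turn -> [String: Any] in
        ["role": turn.role, "content": turn.content]
    }
    let body: [String: Any] = [
        "model": model,
        "messages": messages,
        "tools": tools
    ]
    return try JSONSerialization.data(withJSONObject: body, options: [.sortedKeys])
}
```

The `tools` array is where the function definition goes, so the model sees both the conversation and the functions it is allowed to call in the same request.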

Note that every chat message is sent to the LLM with each request. The LLM processes all messages as a single, concatenated prompt and returns an output accordingly. It will either provide an assistant message requesting further details or issue a function call that includes a JSON object containing the specified parameters for the book_restaurant function.
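The two possible outcomes can be told apart when decoding the response. The following is a minimal sketch that mirrors only the fields of an OpenAI-style chat completion response needed here; the type names (`ChatCompletionResponse`, `AssistantTurn`) and the `interpret` function are assumptions for illustration, and a library such as AIProxy provides its own response types.

```swift
import Foundation

// Partial mirror of a chat completion response (only the fields used here).
struct ChatCompletionResponse: Decodable {
    struct Choice: Decodable { let message: Message }
    struct Message: Decodable {
        let content: String?        // null when the model calls a function
        let toolCalls: [ToolCall]?  // absent for a plain assistant message
        enum CodingKeys: String, CodingKey {
            case content
            case toolCalls = "tool_calls"
        }
    }
    struct ToolCall: Decodable {
        let id: String
        let function: FunctionCall
    }
    struct FunctionCall: Decodable {
        let name: String
        let arguments: String       // a JSON-encoded string, not an object
    }
    let choices: [Choice]
}

// The two outcomes the app has to handle.
enum AssistantTurn: Equatable {
    case message(String)
    case functionCall(id: String, name: String, argumentsJSON: String)
}

// Classifies a raw response as a follow-up message or a function call.
func interpret(_ data: Data) throws -> AssistantTurn {
    let response = try JSONDecoder().decode(ChatCompletionResponse.self, from: data)
    guard let message = response.choices.first?.message else {
        throw DecodingError.dataCorrupted(
            .init(codingPath: [], debugDescription: "Response contains no choices"))
    }
    if let call = message.toolCalls?.first {
        return .functionCall(id: call.id,
                             name: call.function.name,
                             argumentsJSON: call.function.arguments)
    }
    return .message(message.content ?? "")
}
```

A `.message` result is shown to the user as the assistant's reply, while a `.functionCall` result carries the arguments string that the app decodes and acts on.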

Function Execution

After the LLM collects all the required parameters through a series of user messages and assistant responses, it returns a complete set of values necessary to invoke the book_restaurant function. The app then executes this function or takes the appropriate actions.

If the operation is successful, the app provides the final details back to the LLM, which then sends a user-friendly success message, concluding the process. However, if issues arise—such as an unavailable date or time—the app relays the results back to the LLM, prompting further conversation to update or gather additional parameters until the booking is successfully completed.
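A minimal sketch of this last step follows, assuming an OpenAI-style "tool" message is used to relay the result. The `BookingOutcome` type, the stand-in `bookRestaurant` function with its toy availability rule, and the `toolMessage` helper are all hypothetical and only illustrate the flow.

```swift
import Foundation

// Result of attempting the booking inside the app.
enum BookingOutcome {
    case confirmed(confirmationCode: String)
    case unavailable(reason: String)
}

// Stand-in for the app's real booking logic (hypothetical).
// Toy rule for illustration: parties larger than 8 cannot be seated.
func bookRestaurant(name: String, date: String, time: String, guests: Int) -> BookingOutcome {
    guests <= 8
        ? .confirmed(confirmationCode: "ABC123")
        : .unavailable(reason: "No table for \(guests) guests on \(date) at \(time)")
}

// Wraps the outcome in a "tool" message that can be appended to the
// conversation and sent back to the LLM with the next request.
func toolMessage(for outcome: BookingOutcome, toolCallID: String) throws -> [String: Any] {
    let payload: [String: Any]
    switch outcome {
    case .confirmed(let code):
        payload = ["status": "confirmed", "confirmation_code": code]
    case .unavailable(let reason):
        payload = ["status": "unavailable", "reason": reason]
    }
    let data = try JSONSerialization.data(withJSONObject: payload, options: [.sortedKeys])
    return [
        "role": "tool",
        "tool_call_id": toolCallID,   // must match the id of the function call
        "content": String(decoding: data, as: UTF8.self)
    ]
}
```

In the Chat Completions format, this tool message is appended after the assistant message that contained the function call. The model then either writes the success message or, in the unavailable case, asks the user for a different date, time, or party size.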

Conclusion

In summary, LLM-powered function calling isn’t just a technical upgrade—it’s a fundamental shift in how we bridge the gap between user intent and app functionality. By translating everyday language into precise, actionable commands, you can create applications that respond intuitively to user needs. The restaurant booking example presented here offers only a glimpse of the future of interactive, chat-enabled interfaces, opening the door to endless possibilities.

Happy Building 🚀