Swift + OpenRouter: One LLM API to Rule Them All

Learn how to integrate OpenRouter’s Chat Completions API into your Swift app for unified, low‑latency access and billing across hundreds of LLMs.

Major companies including OpenAI, Google, Meta, Anthropic, DeepSeek, and Alibaba continue to release LLMs at an insane speed - we’re talking a handful of releases every week! Each model offers something different: faster responses, lower costs, bigger context, specialized skills, and so on. We, as developers, are responsible for experimenting with them to figure out which model is the fastest and cheapest while still being the best at accomplishing the tasks that are specific to our applications.

This is easier said than done, especially for us as Swift developers, where the tooling ecosystem is slow to catch up. I’ve been a big advocate of the AIProxySwift open source library, which implements clients for many different model providers. But it still leaves enough provider‑specific quirks that swapping between them isn’t as seamless as it should be, especially when you consider that virtually every LLM now supports the same OpenAI‑standard Chat Completions endpoint.

This is where OpenRouter comes in. OpenRouter is a single, OpenAI‑compatible API gateway that puts every major LLM under one unified roof. Simply point your request at OpenRouter’s /chat/completions endpoint, use the same message format you’d send to OpenAI, and behind the scenes it will automatically route your call to the ideal model - whether that’s Anthropic’s Claude, Google’s Gemini, Mistral, or any of the hundreds of others.
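To make the "same message format" point concrete, here is a minimal sketch of building such a request with plain Foundation. The base URL `https://openrouter.ai/api/v1` and the Bearer-token header reflect my reading of OpenRouter's docs, and the type and function names (`ChatMessage`, `ChatRequest`, `makeChatRequest`) are hypothetical helpers for illustration, not part of any library.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest lives here on Linux
#endif

// Hypothetical helper types mirroring the OpenAI-style chat payload.
struct ChatMessage: Codable {
    let role: String      // "system", "user", or "assistant"
    let content: String
}

struct ChatRequest: Codable {
    let model: String     // e.g. a "provider/model" slug; the value is up to you
    let messages: [ChatMessage]
}

// Builds (but does not send) a POST request for the chat completions endpoint.
// Assumption: the endpoint path and Authorization scheme match OpenRouter's docs.
func makeChatRequest(apiKey: String, model: String, prompt: String) throws -> URLRequest {
    let url = URL(string: "https://openrouter.ai/api/v1/chat/completions")!
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")

    let body = ChatRequest(
        model: model,
        messages: [ChatMessage(role: "user", content: prompt)]
    )
    request.httpBody = try JSONEncoder().encode(body)
    return request
}
```

With a helper like this, trying a different model means changing only the `model` string; the URL, headers, and message structure stay the same. The resulting request can be sent with `URLSession` as usual.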

It handles tokenization differences, routing to a different provider when your first-choice provider is down, and even cost‑based routing to keep your bill in check. Oh, and you can monitor your billing across different model providers in one place! For Swift developers, this means you import one client, set one base URL, and suddenly you have instant access to the entire LLM ecosystem - switching between models becomes as simple as changing a single parameter.

Despite all these features, OpenRouter adds only about 30 ms of extra latency, so your chat completions stay fast no matter which model you choose. Pricing is just as transparent: you pay the same per‑token rate the model provider charges, which is listed clearly on OpenRouter’s website, plus a small surcharge when you buy credits. From my experimentation, a $5 top‑up cost $5.64 total (a $0.64 fee), and a $10,000 purchase came with only a $526.69 fee.
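As a quick check on those two data points, the effective surcharge can be worked out as fee divided by credits purchased. The function name below is hypothetical, and the inputs are just the two purchases quoted above, not an official fee schedule.

```swift
import Foundation

// Effective surcharge as a fraction of the credits purchased.
func effectiveFeeRate(credits: Double, fee: Double) -> Double {
    return fee / credits
}

// The two purchases quoted in the text.
let smallTopUp = effectiveFeeRate(credits: 5, fee: 0.64)
let largeTopUp = effectiveFeeRate(credits: 10_000, fee: 526.69)

print(String(format: "$5 top-up: %.1f%%", smallTopUp * 100))       // $5 top-up: 12.8%
print(String(format: "$10,000 top-up: %.1f%%", largeTopUp * 100))  // $10,000 top-up: 5.3%
```

So, going by these two purchases alone, the surcharge is proportionally heavier on small top‑ups than on large ones.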

The Implementation

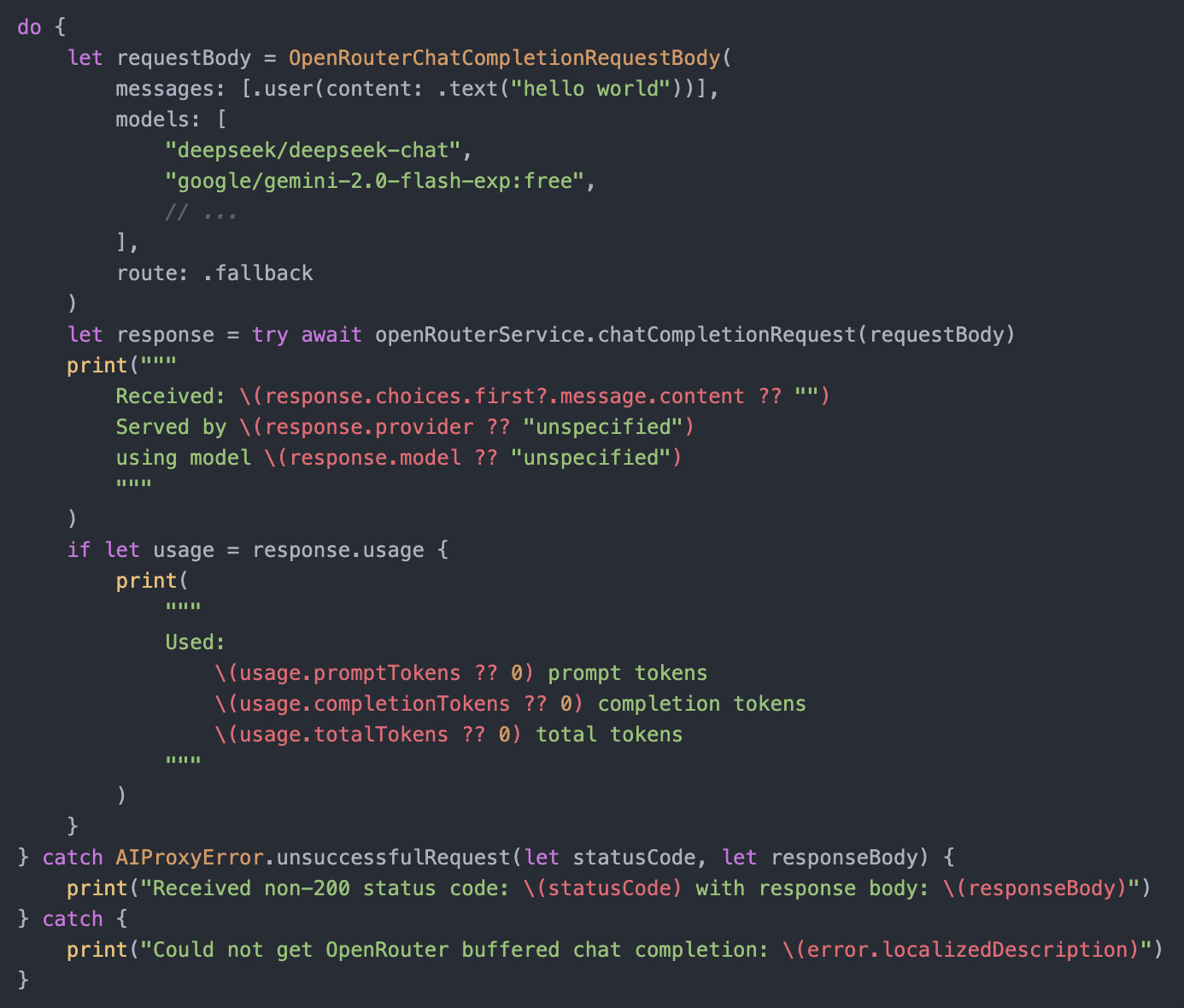

The good news is that AIProxy has already implemented OpenRouter into their library. See docs here - the basic implementation is as simple as:

Note how you can pass in a list of models that you want to prioritize for your app as fallbacks in case rate limits are reached or there are system outages.
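As a rough illustration of the fallback idea, here is a sketch that encodes a request body with a prioritized model list and decodes the reply, using only Foundation. It is not AIProxySwift's API: every type and function name is hypothetical. The `models` array in the request and the `model` field in the response are assumptions based on my reading of OpenRouter's docs, so check them against the current documentation before relying on them.

```swift
import Foundation

// Hypothetical types for illustration; these are NOT AIProxySwift's types.
struct Message: Codable {
    let role: String
    let content: String
}

// Assumption: OpenRouter accepts a `models` array and tries entries in order.
struct FallbackChatBody: Encodable {
    let models: [String]
    let messages: [Message]
}

// Only the fields this sketch needs; JSONDecoder ignores any other keys.
struct ChatReply: Decodable {
    struct Choice: Decodable { let message: Message }
    let model: String          // assumed to name the model that actually answered
    let choices: [Choice]
}

// Encodes the JSON body with the models listed in priority order.
func makeFallbackBody(models: [String], prompt: String) throws -> Data {
    let body = FallbackChatBody(
        models: models,
        messages: [Message(role: "user", content: prompt)]
    )
    return try JSONEncoder().encode(body)
}

// Extracts the answering model and the first choice's text from a response.
func parseReply(_ data: Data) throws -> (model: String, text: String) {
    let reply = try JSONDecoder().decode(ChatReply.self, from: data)
    return (reply.model, reply.choices.first?.message.content ?? "")
}
```

The body from `makeFallbackBody` would be POSTed to the `/chat/completions` endpoint. Reading `model` back from the reply is a cheap way to log how often a fallback actually kicked in.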

Conclusion

OpenRouter is delivering on the promise of a single, OpenAI‑compatible Chat Completions API that gives you instant access to hundreds of LLMs, with much-needed features such as provider failover, cost‑based routing, and unified billing visibility, all for only ~30 ms of added latency.

For Swift developers, that means one client, one base URL, and no more juggling SDKs or provider‑specific quirks. Plus, with AIProxySwift’s built‑in OpenRouter support, you can flip the switch in minutes. This is not only great for experimentation across models, but also for agentic workflows, where different LLMs are likely better suited to different tasks and integrating multiple LLMs is necessary.

Thank you, Natasha. Do you think there's a benefit to switching to OpenRouter when we already benefit from Azure's startup credits (as you shared in a previous post)?

I'm worried about Azure's performance.

Plus, I heard that OpenRouter offers many free-to-use models (as long as you add a small credit top-up, which won't be spent on the free models anyway).