Let’s Build a SwiftUI Study‑Plan App using FoundationModels StreamResponse

Learn how to build a real-time, token-streaming Study Plan app in SwiftUI using Apple’s FoundationModels framework and its powerful streamResponse API.

This is a continuation of my beginner series about Apple’s FoundationModels Framework. Read Introduction to Apple's FoundationModels: Limitations, Capabilities, Tools first.

One of the most mind-blowing API design features of the FoundationModels framework is how it integrates with SwiftUI. Specifically, how you can show the results to the user as the model is generating them token-by-token. Let me show you the magic in the example Study Plan app I built:

So how does this work? It’s super simple!

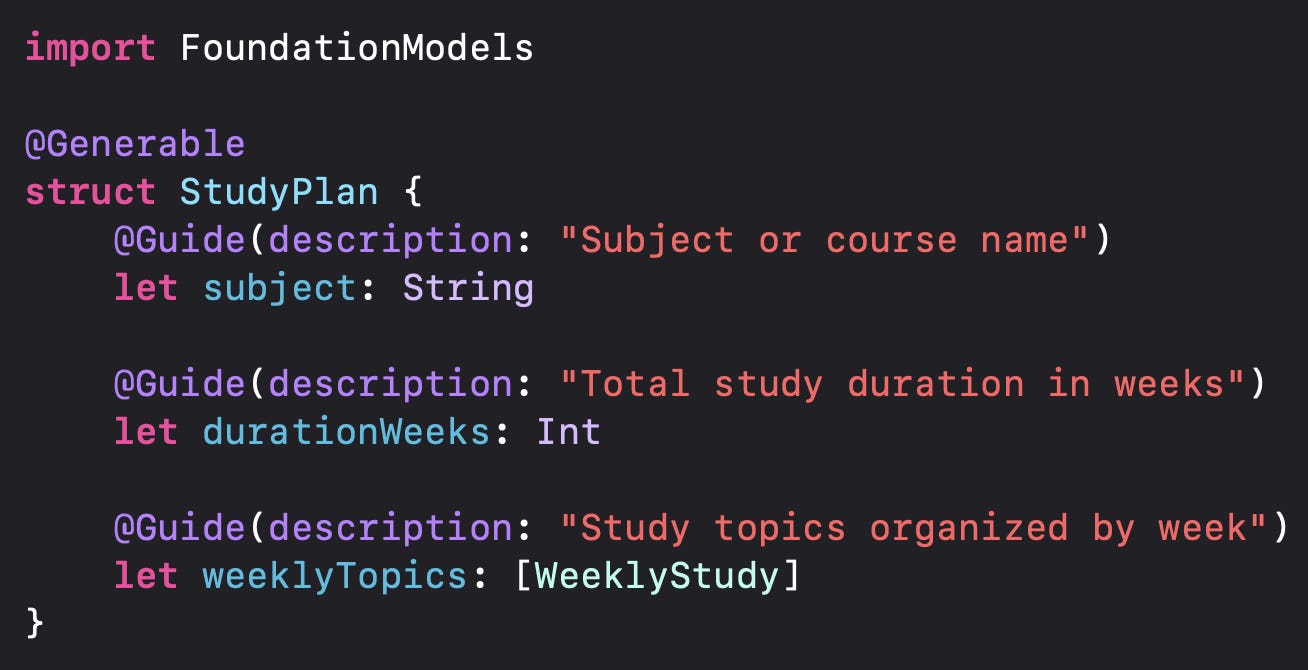

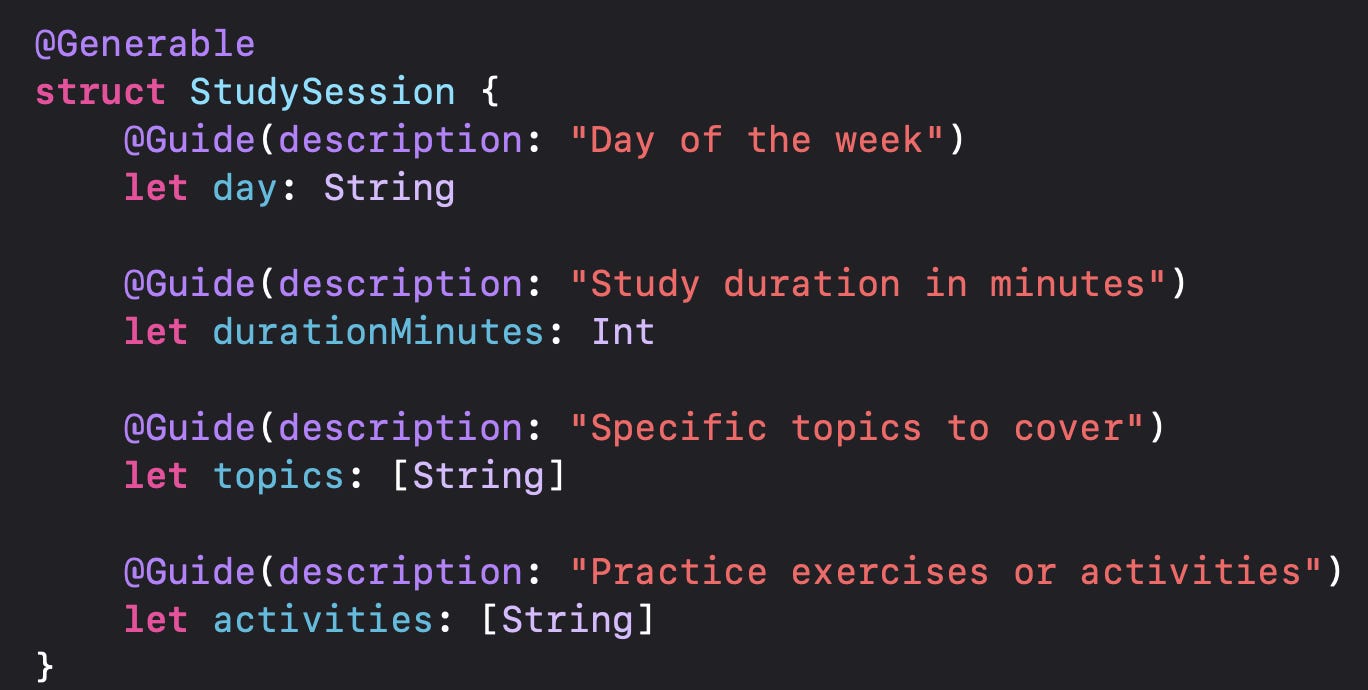

Define the Generable Models

The first step is to define the models that the FoundationModels framework will generate. For the Study Plan app, that’s a StudyPlan model made up of WeeklyStudy topics, each containing StudySessions:
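Here’s a minimal sketch of what these models can look like. The property names and @Guide descriptions are illustrative assumptions, so adapt them to your own app. @Generable lets the framework produce the type directly, and @Guide gives the model a natural-language hint for each property. Properties are generated in the order they are declared, so it helps to put the fields the user sees first at the top.

```swift
import FoundationModels

// The complete plan the model generates.
// Property names and guide text are illustrative assumptions.
@Generable
struct StudyPlan {
    @Guide(description: "A short, motivating title for the plan")
    var title: String

    @Guide(description: "One or two sentences summarizing the plan")
    var overview: String

    @Guide(description: "One entry per week, in order")
    var weeks: [WeeklyStudy]
}

// What to focus on during a single week.
@Generable
struct WeeklyStudy {
    @Guide(description: "The main topic for this week")
    var topic: String

    @Guide(description: "Two to four study sessions for this week")
    var sessions: [StudySession]
}

// One concrete study session.
@Generable
struct StudySession {
    @Guide(description: "What to do in this session")
    var title: String

    @Guide(description: "Session length in minutes")
    var durationMinutes: Int
}
```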

Note that the model will generate ALL fields of a Generable type, regardless of whether they are displayed to the user. So if you have a bigger model that mixes non-generated and generated content, keep the generated content in separate objects, and sync the generated info back into the bigger model (maybe saved in SwiftData) once it has fully generated. Keep the Generable models as separate entities, used only for generating information as needed!
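A minimal sketch of that separation, assuming SwiftData for persistence. The SavedStudyPlan class, its properties, and applyGenerated(title:overview:) are hypothetical: the class owns user data the model never generates, and only receives generated text once the plan is complete.

```swift
import Foundation
import SwiftData

// Hypothetical persisted model. It mixes data the language model never
// generates (subject, createdAt, isFavorite) with text copied in from a
// finished generation (title, overview).
@Model
final class SavedStudyPlan {
    // User-owned data: never part of the Generable type.
    var subject: String
    var createdAt: Date
    var isFavorite: Bool

    // Copied from the generated StudyPlan once streaming has completed.
    var title: String
    var overview: String

    init(subject: String) {
        self.subject = subject
        self.createdAt = Date()
        self.isFavorite = false
        self.title = ""
        self.overview = ""
    }

    // Sync the final generated values into the persisted model.
    // Call this once after generation completes, not on every partial update.
    func applyGenerated(title: String, overview: String) {
        self.title = title
        self.overview = overview
    }
}
```

Keeping the Generable type free of persistence concerns means partial snapshots never touch the saved model; only the finished values are copied over.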

The UI

One option is to build a chat-based UI to collect the study plan preferences the model needs as input. However, I would argue that this is harder for the user than a quick, simple input screen, especially when the input you need is very specific:
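As a rough sketch, such an input screen can be a plain SwiftUI Form. The StudyPlanFormView name and its fields (subject, number of weeks, hours per week, level) are hypothetical; use whichever inputs your prompt actually needs.

```swift
import SwiftUI

// Hypothetical input form; field names and ranges are illustrative.
struct StudyPlanFormView: View {
    @State private var subject = ""
    @State private var weeks = 4
    @State private var hoursPerWeek = 5
    @State private var level = "Beginner"

    // Called with the user's choices when they tap the button.
    var onGenerate: (_ subject: String, _ weeks: Int, _ hoursPerWeek: Int, _ level: String) -> Void

    var body: some View {
        Form {
            Section("What do you want to study?") {
                TextField("Subject", text: $subject)
            }
            Section("Schedule") {
                Stepper("Weeks: \(weeks)", value: $weeks, in: 1...12)
                Stepper("Hours per week: \(hoursPerWeek)", value: $hoursPerWeek, in: 1...40)
                Picker("Level", selection: $level) {
                    ForEach(["Beginner", "Intermediate", "Advanced"], id: \.self) {
                        Text($0).tag($0)
                    }
                }
            }
            Button("Generate Study Plan") {
                onGenerate(subject, weeks, hoursPerWeek, level)
            }
            // Don't allow generation until a subject has been entered.
            .disabled(subject.trimmingCharacters(in: .whitespaces).isEmpty)
        }
    }
}
```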

I would argue that even though we’re used to chat-based interfaces like ChatGPT, chat might not be the best UI for most use cases on mobile, especially when the input is as simple as the form above.

Once the user enters the subject of their study and taps “Generate Study Plan”, the app has gathered all the information it needs to build the prompt, and the model is ready to stream!

The Prompt

Using the specific input values of the user, the prompt is generated as follows in the StudyPlanViewModel:
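Here’s a hedged sketch of such a prompt builder. To keep it short, it’s written as a free function over a hypothetical StudyPlanRequest struct rather than as a method on StudyPlanViewModel, and the prompt wording is an assumption, not the exact prompt used in the app.

```swift
import Foundation

// Hypothetical input collected from the form; field names are illustrative.
struct StudyPlanRequest {
    var subject: String
    var weeks: Int
    var hoursPerWeek: Int
    var level: String
}

// Builds a short but specific prompt from the user's input.
func makeStudyPlanPrompt(for request: StudyPlanRequest) -> String {
    """
    Create a \(request.weeks)-week study plan for learning \(request.subject) \
    at a \(request.level.lowercased()) level.
    The learner can study about \(request.hoursPerWeek) hours per week.
    For each week, give one focus topic and 2 to 4 study sessions with a duration in minutes.
    """
}
```

Interpolating concrete numbers keeps the prompt specific without making it long.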

Again, keep the context window in mind here. The on-device model’s context window (4,096 tokens) is shared by the instructions, the prompt, and the output, so the longer the prompt, the less room is left for output tokens. It’s a balance: you want the prompt to be specific, but not so long that it eats up the context window. This is why it’s important to test shorter versions of the prompt that still produce the same quality of output.

PartiallyGenerated

Let’s go back to running a “Hello, World!” prompt in the FoundationModels framework:
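For reference, a minimal non-streaming call looks something like this. respond(to:) only returns after the entire response has been generated. The sayHello helper name and the ContinuousClock timing are my additions for illustration.

```swift
import FoundationModels

// Sends one prompt and waits for the complete answer.
// `sayHello` is a hypothetical helper; the timing code is illustrative.
func sayHello() async throws -> String {
    let session = LanguageModelSession()
    let clock = ContinuousClock()
    let start = clock.now

    // respond(to:) returns only after the whole response has been generated.
    let response = try await session.respond(to: "Hello, World!")

    print(response.content)
    print("Took \(clock.now - start)")
    return response.content
}
```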

As you can see, the model took about 1 second to respond with “Hello! How can I assist you today?” That seems super fast, and it is! But as your prompts and required outputs get bigger, the response time can grow significantly.

Since we don’t want the user staring at a spinner, the FoundationModels API provides a nice streamResponse function on the session that streams the response token-by-token as the model generates it:
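A minimal sketch of streaming the same prompt. One assumption to flag: this targets an SDK where the stream yields snapshots that expose the partially generated value through a content property. Some earlier betas yielded the PartiallyGenerated value directly, in which case you would drop the .content access. streamHello is a hypothetical helper name.

```swift
import FoundationModels

// Streams the reply to the same prompt. `streamHello` is a hypothetical helper.
func streamHello() async throws -> String {
    let session = LanguageModelSession()
    var latest = ""

    // Each element is a snapshot of everything generated so far,
    // not just the newest token (assumes snapshots expose `content`).
    for try await snapshot in session.streamResponse(to: "Hello, World!") {
        latest = snapshot.content
        print(latest)
    }
    return latest
}
```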

Notice that it first printed “Hello”, then “Hello! How can I assist”, then “Hello! How can I assist you today?”. Each printed value contains the full response so far, not just the newest token. Each of these outputs is of type String.PartiallyGenerated, which is what the stream produces:

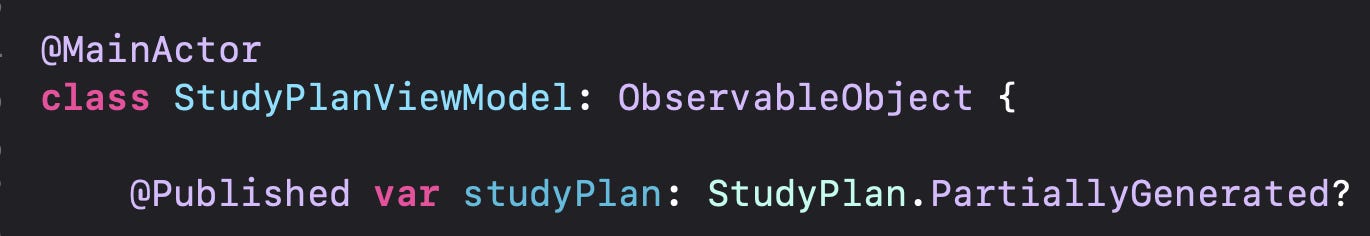

The Generable macro automatically creates a PartiallyGenerated type for any Generable type in the same way, so nothing else needs to be done to our custom StudyPlan model for this to work!

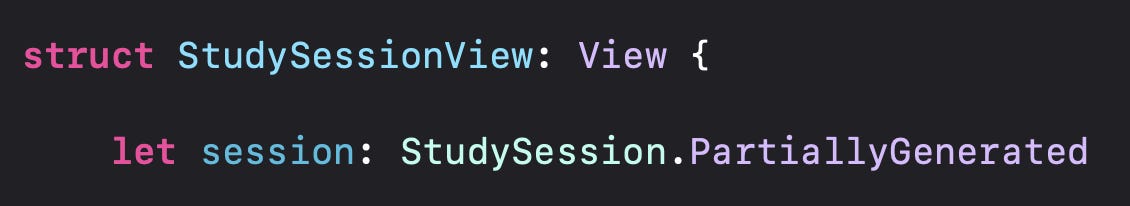

We simply use the PartiallyGenerated type directly in the View or ViewModel! That’s it!

The same applies to the other Generable types embedded inside StudyPlan!

The only difference is that every property of the PartiallyGenerated type is optional and can be nil, even if that property is required in the original type!

For example, my StudyPlan type, with all of its properties required:

Becomes this as a PartiallyGenerated object that I can view by expanding the Generable macro:

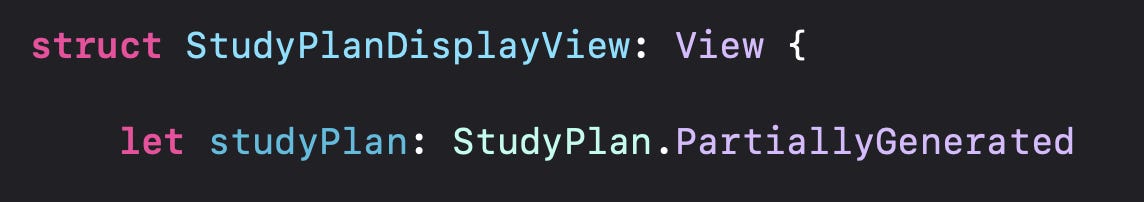

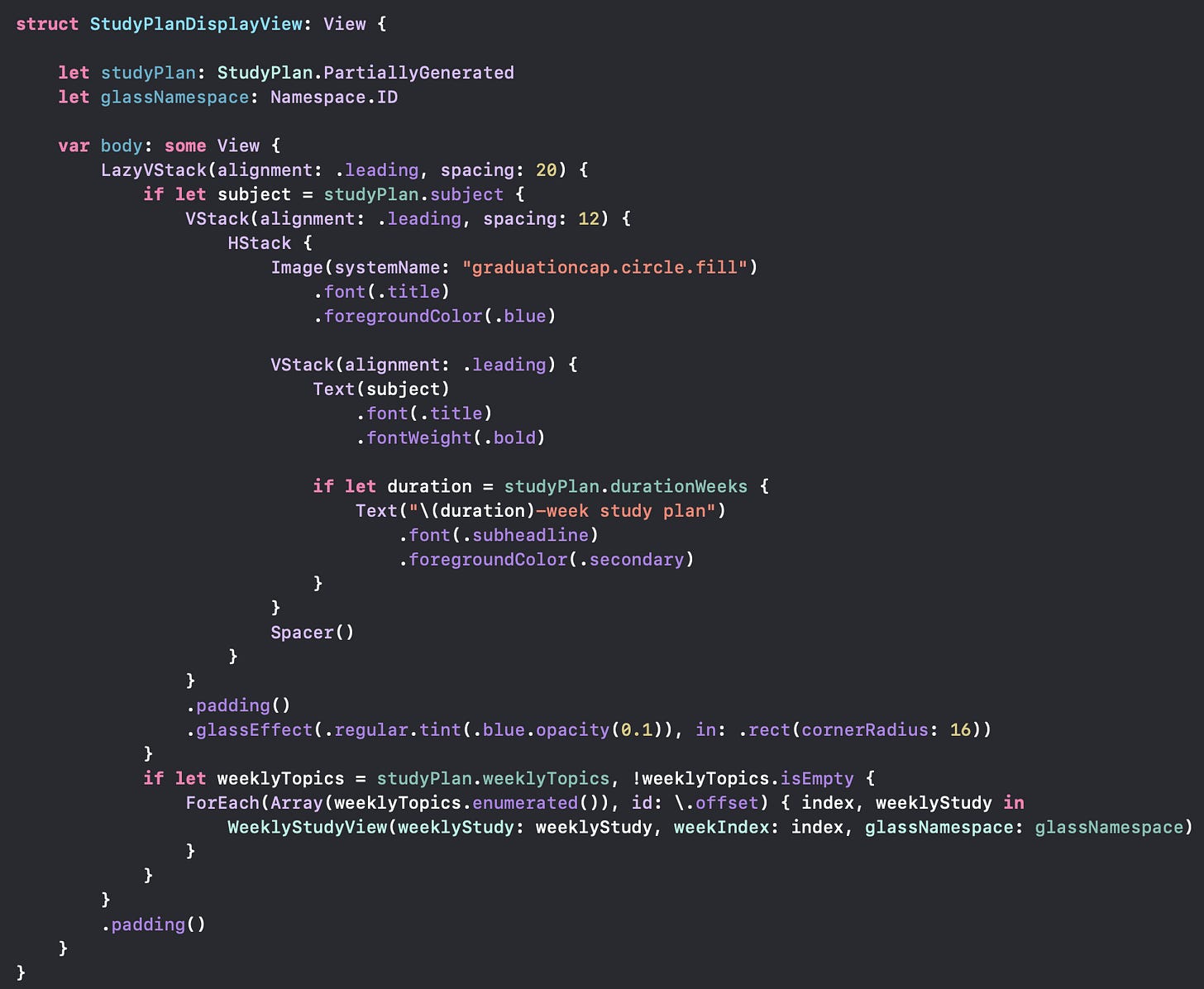

These PartiallyGenerated objects are then used to display the information in the same way as the originals (keeping in mind that every value may be nil!). They just keep changing as the model generates its output! For example, here is the StudyPlanDisplayView:
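Here’s a sketch of what StudyPlanDisplayView could look like. It assumes the StudyPlan, WeeklyStudy, and StudySession types have the hypothetical properties used below (title, overview, weeks, topic, sessions, durationMinutes). Because every property is optional while streaming, each one is either unwrapped with if let or given a placeholder with ??. Rows are identified by position via enumerated(), so the sketch doesn’t rely on any particular identity on the partial types.

```swift
import FoundationModels
import SwiftUI

// Renders a StudyPlan while it is still streaming.
// Assumes the article's StudyPlan model with the hypothetical properties below.
struct StudyPlanDisplayView: View {
    let plan: StudyPlan.PartiallyGenerated

    var body: some View {
        List {
            Section {
                // Every partial property may still be nil, so show a placeholder.
                Text(plan.title ?? "Generating title…")
                    .font(.title2.bold())
                if let overview = plan.overview {
                    Text(overview)
                }
            }
            // Weeks appear one by one as the array grows during streaming.
            ForEach(Array((plan.weeks ?? []).enumerated()), id: \.offset) { index, week in
                Section("Week \(index + 1): \(week.topic ?? "…")") {
                    ForEach(Array((week.sessions ?? []).enumerated()), id: \.offset) { _, session in
                        HStack {
                            Text(session.title ?? "…")
                            Spacer()
                            if let minutes = session.durationMinutes {
                                Text("\(minutes) min")
                                    .foregroundStyle(.secondary)
                            }
                        }
                    }
                }
            }
        }
    }
}
```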

As each field is generated, the view gets filled out magically!

Update the PartiallyGenerated Object

After the user fills out their study plan parameters in the UI and taps the “Generate Study Plan” button, we use the LanguageModelSession’s streamResponse function inside the view model to generate the study plan.

The key here is to always update the StudyPlan.PartiallyGenerated object with the latest partial generation from the stream!
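A minimal sketch of that view model, written as an ObservableObject so it can be held as a StateObject. It assumes the article’s @Generable StudyPlan type; isGenerating, errorMessage, generate(prompt:), and the instructions text are hypothetical. Like the streaming example earlier, it assumes snapshots expose a content property.

```swift
import FoundationModels
import SwiftUI

// Sketch of the view model; property and method names are hypothetical.
@MainActor
final class StudyPlanViewModel: ObservableObject {
    // The latest partial plan; the UI re-renders whenever it changes.
    @Published var studyPlan: StudyPlan.PartiallyGenerated?
    @Published var isGenerating = false
    @Published var errorMessage: String?

    func generate(prompt: String) async {
        isGenerating = true
        errorMessage = nil
        studyPlan = nil
        defer { isGenerating = false }

        // A fresh session per plan keeps earlier requests out of the
        // transcript, so they don't use up the context window.
        let session = LanguageModelSession(
            instructions: "You are a study coach. Create realistic, concise study plans."
        )

        do {
            let stream = session.streamResponse(to: prompt, generating: StudyPlan.self)
            for try await snapshot in stream {
                // Always replace the partial object with the newest snapshot.
                studyPlan = snapshot.content
            }
        } catch {
            errorMessage = error.localizedDescription
        }
    }
}
```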

As the object keeps getting updated, set up your view so that it refreshes automatically, holding the view model as a StateObject:

Later on in the code where the study plan is displayed:
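Putting these two pieces together, here’s a sketch of a screen that owns the view model as a @StateObject and shows StudyPlanDisplayView as soon as the first snapshot arrives. It assumes the view model exposes studyPlan, isGenerating, and generate(prompt:), and that StudyPlanDisplayView takes a plan parameter; these are hypothetical names, and the inline prompt is a placeholder.

```swift
import SwiftUI

// Owns the view model for the lifetime of the screen, so every update
// to `studyPlan` during streaming re-renders this view.
struct StudyPlanScreen: View {
    @StateObject private var viewModel = StudyPlanViewModel()
    @State private var subject = ""

    var body: some View {
        NavigationStack {
            VStack {
                if let plan = viewModel.studyPlan {
                    // Re-rendered each time a new snapshot arrives.
                    StudyPlanDisplayView(plan: plan)
                } else {
                    Form {
                        TextField("Subject", text: $subject)
                        Button("Generate Study Plan") {
                            Task {
                                await viewModel.generate(prompt: "Create a 4-week study plan for \(subject).")
                            }
                        }
                        .disabled(subject.isEmpty || viewModel.isGenerating)
                    }
                }
            }
            .navigationTitle("Study Plan")
        }
    }
}
```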

That’s it!

Error Handling

There are special errors that can be thrown while the model is generating:

There are already many errors and new errors may be added in the future as the API expands!
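One way to handle them is to map LanguageModelSession.GenerationError cases to user-facing messages. This sketch handles only a few cases (exceededContextWindowSize, guardrailViolation, rateLimited) and falls back to a default branch for the rest; the message(for:) helper and its wording are hypothetical.

```swift
import FoundationModels

// Maps generation failures to user-facing messages.
// `message(for:)` and the message wording are hypothetical.
func message(for error: Error) -> String {
    guard let generationError = error as? LanguageModelSession.GenerationError else {
        return error.localizedDescription
    }
    switch generationError {
    case .exceededContextWindowSize:
        return "This request is too long. Try a shorter subject or fewer weeks."
    case .guardrailViolation:
        return "This request can't be answered. Try rephrasing it."
    case .rateLimited:
        return "Too many requests right now. Please try again shortly."
    default:
        // New cases may be added in future releases, so keep a fallback.
        return generationError.localizedDescription
    }
}
```

The default branch matters here precisely because the error enum can grow as the API expands.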

Conclusion

Apple’s FoundationModels framework makes it remarkably easy and surprisingly delightful to build intelligent, real-time generative apps in SwiftUI. With just a few lines of code, you can stream output token-by-token, giving users immediate feedback and a far more engaging experience than waiting for a full response to load. By using the Generable macro and leveraging PartiallyGenerated types, you can seamlessly display evolving content in your UI as the model generates it!
